library(osfr) # Interact with OSF via R

# Make a "models" folder if it doesn't exist already

if (!file.exists("models")) { dir.create("models") }

# Download model_minivans_mega_mlm_brms.rds from OSF

osf_retrieve_file("https://osf.io/zp6eh") |>

osf_download(path = "models", conflicts = "overwrite", progress = TRUE)I recently posted a guide (mostly for future-me) about how to analyze conjoint survey data with R. I explore two different estimands that social scientists are interested in—causal average marginal component effects (AMCEs) and descriptive marginal means—and show how to find them with R, with both frequentist and Bayesian approaches.

However, that post is a little wrong. It’s not wrong wrong, but it is a bit oversimplified.

When political scientists, psychologists, economists, and other social scientists analyze conjoint data, they overwhelmingly do it with ordinary least squares (OLS) regression, or just standard linear regression (lm(y ~ x) in R; reg y x in Stata). Even if the outcome is binary, they’ll use OLS and call it a linear probability model. The main R package for working with conjoint data in a frequentist way ({cregg}) uses OLS and linear probability models. Social scientists (and economists in particular) adore OLS.

In my earlier guide, I showed how to analyze the data with logistic regression, but even that is still overly simplified. In reality, conjoint choice-based experiments are more complex than what regular old OLS regression—or even logistic regression—can handle (though I’m sure some econometrician somewhere has a proof showing that OLS works just fine for multinomial conjoint data :shrug:).

A recent paper published in Political Science Research and Methods (Jensen et al. 2021) does an excellent job explaining the problem with using plain old OLS to estimate AMCEs and marginal means with conjoint data (access the preprint here). Their main argument boils down to this: OLS throws away too much useful information about (1) the relationships and covariance between the different combinations of feature levels offered to respondents, and (2) individual-specific differences in how respondents react to different feature levels.

Jensen et al. explain three different approaches to analyzing data that has a natural hierarchical structure like conjoint data (where lots of choices related to different “products” are nested within individuals). This also is the same argument from chapter 15 of Bayes Rules!.

-

Pooled data (“no room for individuality”): The easiest way to deal with individual-specific effects is to not to. Lump each choice by each respondent into one big dataset, run OLS on it, and be done. Believe it or not, linear regression right away.

However, this erases all individual-level heterogeneity and details about how different feature levels interact with individual characteristics.

-

No pooling (“every person for themselves”): An alternative way to deal with individual-specific effects is to run a separate model for each individual. If 800 people participated in the experiment, run 800 different models. That way you can perfectly model the relationship between each individual’s characteristics (political party, age, income, education, etc.) with their choices and preferences.

This sounds neat, but has substantial issues. It’ll only show an identified causal effect if each respondent saw every combination of conjoint features at least once. In the candidate experiment from my previous post, there were 8 features with different counts of attributes: 2 × 6 × 6 × 6 × 2 × 6 × 6 × 6 = 186,624 possibilities. Every respondent would need to see each of the nearly 200,000 options. lol.

-

Partial pooling (“a welcome middle ground”): Jensen et al.’s solution is to use hierarchical models that allow individual-level characteristics to inform choice-level decisions. As Bayes Rules! says:

[T]hough each group is unique, having been sampled from the same population, all groups are connected and thus might contain valuable information about one another.

In this kind of model, we want individual-level characteristics like age, income, education, etc. to inform the decision to choose specific outcomes and interact with different feature levels in different ways. Not every respondent needs to have seen all 200,000 options—information about respondents with similar characteristics facing similar sets of choices gets shared because of the hierarchical-ness of the model.

For the best overviews I’ve found of how multilevel models work, check out these two resources:

- Chapters 15–17 in Bayes Rules! (especially chapter 17)

- Michael Clark’s Mixed Models with R guide

I also have a guide here, but it’s nowhere near as good as those ↑

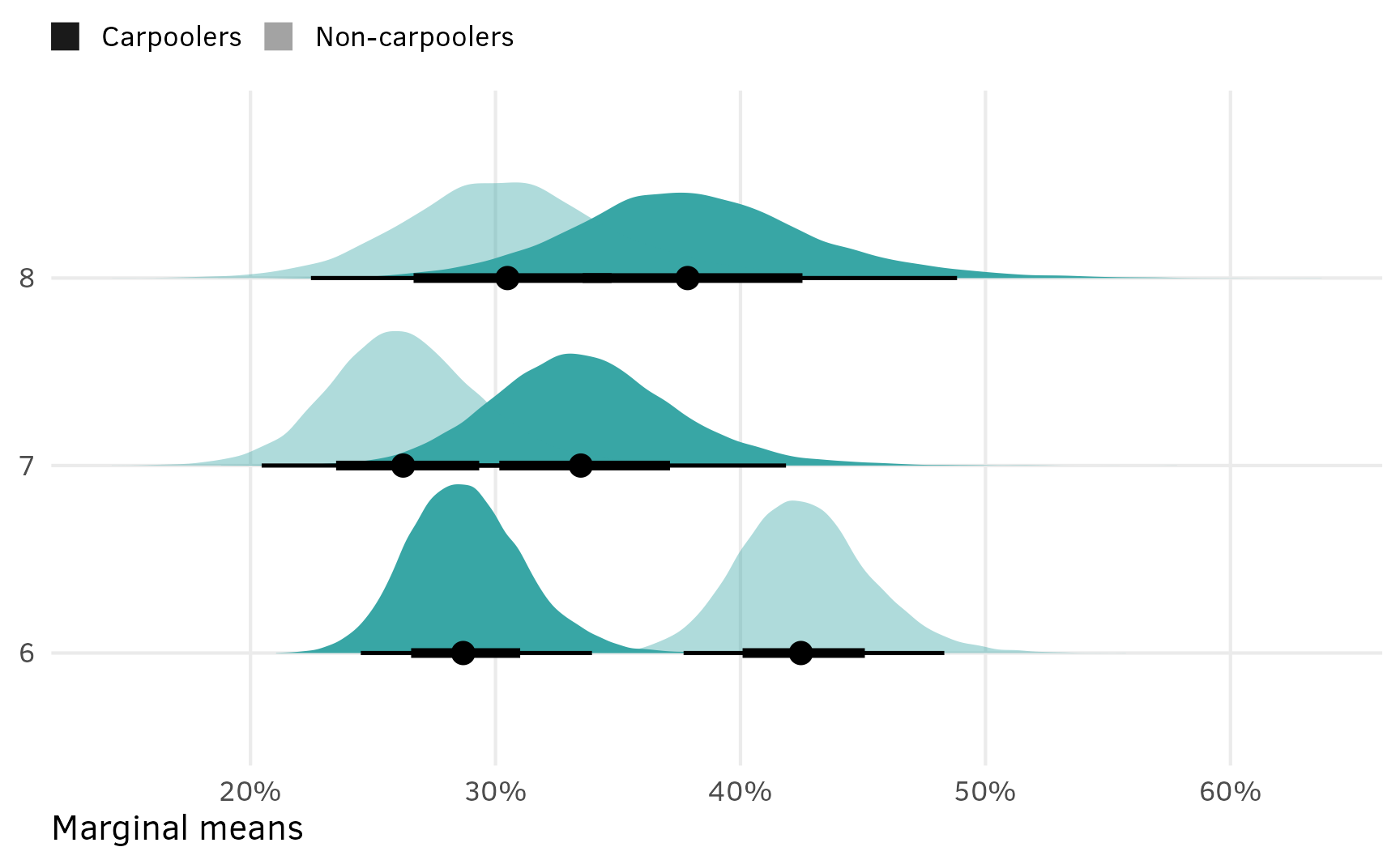

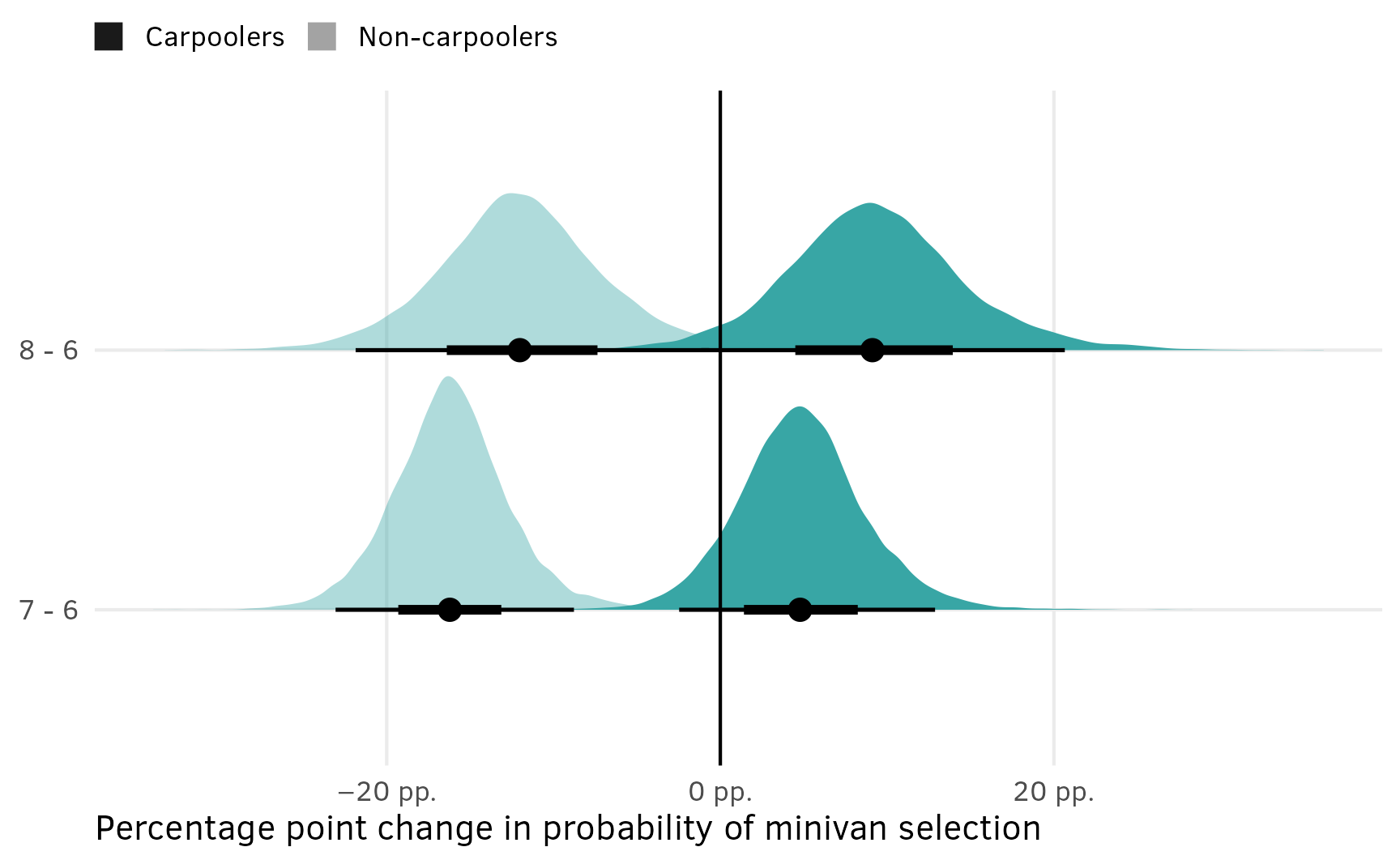

By using a multilevel hierarchical model, Jensen et al. (2021) show that we can still find AMCEs and causal effects, just like in my previous guide, but we can take advantage of the far richer heterogeneity that we get from these complex statements. We can make cool statements like this (in an experiment that varied policies related to unions):

On average, Democrats are \(x\) percentage points more likely than demographically similar Republicans to support a plan that includes expanding union power, relative to the status quo.

Using hierarchical models for conjoint experiments in political science is new and exciting and revolutionary and neat. That’s the whole point of Jensen et al.’s paper—it’s a call to stop using OLS for everything.

I’ve been working on a conjoint experiment with my coauthors Marc Dotson and Suparna Chaudhry. Suparna and I are political scientists and this multilevel stuff in general is still relatively new and wildly underused in the discipline. Marc, though, is a marketing scholar. The marketing world has been using hierarchical models for conjoint experiments for a long time and it’s standard practice in that discipline. There’s a whole textbook about the hierarchical model approach in marketing (Chapman and Feit 2019), and these fancy conjoint multilevel models are used widely throughout the marketing industry.

lol at political science, just now discovering this.

So, I need to expand my previous conjoint guide. That’s what this post is for.

Here’s what I assume you know:

- You’re familiar with R and the tidyverse (particularly {dplyr} and {ggplot2}).

- You’re familiar with linear regression and packages like {broom} for converting regression results into tidy data frames and {marginaleffects} for calculating marginal effects.

- You’re familiar with {brms} for running Bayesian regression models and {tidybayes} and {ggdist} for manipulating and plotting posterior draws. You don’t need to know how to write stuff in raw Stan.

I’ll do three things in this guide:

- Chocolate: Analyze a simple choice-based conjoint experiment where respondents only saw one set of options. This is so I can (1) explore the {mlogit} package, and (2) figure out how to work with multinomial models and predictions both frequentistly and Bayesianly.

- Minivans: Analyze a more complex choice-based conjoint experiment where respondents saw three randomly selected options fifteen times. I do this with both {mlogit} and {brms} to figure out how to work with true multinomial outcomes both frequentistly and Bayesianly.

- Minivans again: Analyze the same complex choice-based experiment but incorporate respondent-level characteristics into the estimates using a hierarchical or multilevel model. I only do this with {brms} because in reality I have no interest in working with {mlogit} (I only use it here as a sort of baseline for my {brms} explorations).

Throughout this example, I’ll use data from two different simulated conjoint choice experiments. You can download these files and follow along:

-

choco_candy.csv: This is simulated data for a hypothetical experiment about consumer preferences for candy features. It comes from p. 292 in Kuhfeld (2010), a SAS technical note about how to run multinomial models in SAS. Instead of copying/pasting from the PDF, I found a version of it here, cleaned up by Sangwoo Kim (who also has a YouTube tutorial using the same candy data) -

rintro-chapter13conjoint.csv: This is simultated data for a hypothetical experiment about consumer preferences for minivan features. It comes from chapter 13 in Chapman and Feit (2019) and is available from the book’s resources page (or directly from their data folder)

Additionally, in Part 3, I fit a huge Stan model with {brms} that takes ≈30 minutes to run on my fast laptop. If you want to follow along and not melt your CPU for half an hour, you can download an .rds file of that fitted model that I stuck in an OSF project. The code for brm() later in this guide will load the .rds file automatically instead of rerunning the model as long as you put it in a folder named “models” in your working directory. This code uses the {osfr} package to download the .rds file from OSF automatically and places it where it needs to go:

Let’s load some libraries, create some helper functions, load the data, and get started!

library(tidyverse) # ggplot, dplyr, and friends

library(broom) # Convert model objects to tidy data frames

library(parameters) # Show model results as nice tables

library(survey) # Panel-ish frequentist regression models

library(mlogit) # Frequentist multinomial regression models

library(dfidx) # Structure data for {mlogit} models

library(scales) # Nicer labeling functions

library(marginaleffects) # Calculate marginal effects

library(ggforce) # For facet_col()

library(brms) # The best formula-based interface to Stan

library(tidybayes) # Manipulate Stan results in tidy ways

library(ggdist) # Fancy distribution plots

library(patchwork) # Combine ggplot plots

library(rcartocolor) # Color palettes from CARTOColors (https://carto.com/carto-colors/)

# Custom ggplot theme to make pretty plots

# Get the font at https://github.com/intel/clear-sans

theme_nice <- function() {

theme_minimal(base_family = "Clear Sans") +

theme(panel.grid.minor = element_blank(),

plot.title = element_text(family = "Clear Sans", face = "bold"),

axis.title.x = element_text(hjust = 0),

axis.title.y = element_text(hjust = 1),

strip.text = element_text(family = "Clear Sans", face = "bold",

size = rel(0.75), hjust = 0),

strip.background = element_rect(fill = "grey90", color = NA))

}

theme_set(theme_nice())

clrs <- carto_pal(name = "Prism")

# Functions for formatting things as percentage points

label_pp <- label_number(accuracy = 1, scale = 100,

suffix = " pp.", style_negative = "minus")chocolate <- read_csv("data/choco_candy.csv") %>%

mutate(

dark = case_match(dark, 0 ~ "Milk", 1 ~ "Dark"),

dark = factor(dark, levels = c("Milk", "Dark")),

soft = case_match(soft, 0 ~ "Chewy", 1 ~ "Soft"),

soft = factor(soft, levels = c("Chewy", "Soft")),

nuts = case_match(nuts, 0 ~ "No nuts", 1 ~ "Nuts"),

nuts = factor(nuts, levels = c("No nuts", "Nuts"))

)

minivans <- read_csv("data/rintro-chapter13conjoint.csv") %>%

mutate(

across(c(seat, cargo, price), factor),

carpool = factor(carpool, levels = c("no", "yes")),

eng = factor(eng, levels = c("gas", "hyb", "elec"))

)

Part 1: Candy; single question; basic multinomial logit

The setup

In this experiment, respondents are asked to choose which of these kinds of candies they’d want to buy. Respondents only see this question one time and all possible options are presented simultaneously.

| A | B | C | D | E | F | G | H | |

|---|---|---|---|---|---|---|---|---|

| Chocolate | Milk | Milk | Milk | Milk | Dark | Dark | Dark | Dark |

| Center | Chewy | Chewy | Soft | Soft | Chewy | Chewy | Soft | Soft |

| Nuts | No nuts | Nuts | No nuts | Nuts | No nuts | Nuts | No nuts | Nuts |

| Choice |

The data

The data for this kind of experiment looks like this, with one row for each possible alternative (so eight rows per person, or subj), with the alternative that was selected marked as 1 in choice. Here, Subject 1 chose option E (dark, chewy, no nuts). There were 10 respondents, with 8 rows each, so there are 10 × 8 = 80 rows.

chocolate

## # A tibble: 80 × 6

## subj choice alt dark soft nuts

## <dbl> <dbl> <chr> <fct> <fct> <fct>

## 1 1 0 A Milk Chewy No nuts

## 2 1 0 B Milk Chewy Nuts

## 3 1 0 C Milk Soft No nuts

## 4 1 0 D Milk Soft Nuts

## 5 1 1 E Dark Chewy No nuts

## 6 1 0 F Dark Chewy Nuts

## 7 1 0 G Dark Soft No nuts

## 8 1 0 H Dark Soft Nuts

## 9 2 0 A Milk Chewy No nuts

## 10 2 0 B Milk Chewy Nuts

## # ℹ 70 more rowsThe model

Respondents were shown eight different options and asked to select one. While this seems like a binary yes/no choice that could work with just regular plain old logistic regression, we want to account for the features and levels in all the unchosen categories too. To do this, we can use multinomial logistic regression, where the outcome variable is an unordered categorical variable with more than two categories. In this case we have eight different possible outcomes: alternatives A through H.

Original SAS model as a baseline

I know nothing about SAS. I have never opened SAS in my life. It is a mystery to me.

I copied these results directly from p. 297 in SAS’s massive “Discrete Choice” technical note (Kuhfeld 2010).

I only have this SAS output here as a baseline reference for what the actual correct coefficients are supposed to be.

SAS apparently fits these models with proportional hazard survival-style models, which feels weird, but there’s probably a mathematical or statistical reason for it. You use PROC PHREG to do it:

proc phreg data=chocs outest=betas;

strata subj set;

model c*c(2) = dark soft nuts / ties=breslow;

run;It gives these results:

Choice of Chocolate Candies

The PHREG Procedure

Multinomial Logit Parameter Estimates

Parameter Standard

DF Estimate Error Chi-Square Pr > ChiSq

Dark Chocolate 1 1.38629 0.79057 3.0749 0.0795

Soft Center 1 -2.19722 1.05409 4.3450 0.0371

With Nuts 1 0.84730 0.69007 1.5076 0.2195Survival model

Ew, enough SAS. Let’s do this with R instead.

We can recreate the same proportional hazards model with coxph() from the {survival} package. Again, this feels weird and not like an intended purpose of survival models and not like multinomial logit at all—in my mind it is neither (1) multinomial nor (2) logit, but whatever. People far smarter than me invented these things, so I’ll just trust them.

model_chocolate_survival <- coxph(

Surv(subj, choice) ~ dark + soft + nuts,

data = chocolate,

ties = "breslow" # This is what SAS uses

)

model_parameters(model_chocolate_survival, digits = 4, p_digits = 4)

## Parameter | Coefficient | SE | 95% CI | z | p

## ----------------------------------------------------------------------

## dark [Dark] | 1.3863 | 0.7906 | [-0.16, 2.94] | 1.7535 | 0.0795

## soft [Soft] | -2.1972 | 1.0541 | [-4.26, -0.13] | -2.0845 | 0.0371

## nuts [Nuts] | 0.8473 | 0.6901 | [-0.51, 2.20] | 1.2279 | 0.2195The coefficients, standard errors, and p-values are identical to the SAS output! The only difference is the statistic: in SAS they use a chi-square statistic, while survival:coxph() uses a z statistic. There’s probably a way to make coxph() use a chi-square statistic, but I don’t care about that. I never use survival models and I’m only doing this to replicate the SAS output and it just doesn’t matter.

Poisson model

An alternative way to fit a multinomial logit model without resorting to survival models is to actually (mis?)use another model family. We can use a Poisson model, even though choice isn’t technically count data, because of obscure stats reasons. See here for an illustration of the relationship between multinomial and Poisson distributions; or see this 2011 Biometrika paper about using Poisson models to reduce bias in multinomial logit models. Richard McElreath has a subsection about this in Statistical Rethinking as well: “Multinomial in disguise as Poisson” (11.3.3). Or as he said over on the currently-walled-garden Bluesky, “All count distributions are just one or more Poisson distributions in a trench coat.”

To account for the repeated subjects in the data, we’ll use svyglm() from the {survey} package so that the standard errors are more accurate.

model_chocolate_poisson <- glm(

choice ~ dark + soft + nuts,

data = chocolate,

family = poisson()

)

model_parameters(model_chocolate_poisson, digits = 4, p_digits = 4)

## Parameter | Log-Mean | SE | 95% CI | z | p

## -------------------------------------------------------------------

## (Intercept) | -2.9188 | 0.8628 | [-4.97, -1.49] | -3.3829 | 0.0007

## dark [Dark] | 1.3863 | 0.7906 | [ 0.00, 3.28] | 1.7535 | 0.0795

## soft [Soft] | -2.1972 | 1.0541 | [-5.11, -0.53] | -2.0845 | 0.0371

## nuts [Nuts] | 0.8473 | 0.6901 | [-0.43, 2.38] | 1.2279 | 0.2195Lovely—the results are the same.

mlogit model

Finally, we can use the {mlogit} package to fit the model. Before using mlogit(), we need to transform our data a bit to specify which column represents the choice (choice) and how the data is indexed: subjects (subj) with repeated alternatives (alt).

We can then use this indexed data frame with mlogit(), which uses the familiar R formula interface, but with some extra features separated by |s

outcome ~ features | individual-level variables | alternative-level variablesIf we had columns related to individual-level characteristics or alternative-level characteristics, we could include those in the model—and we’ll do precisely that later in this post. (Incorporating individual-level covariates is the whole point of this post!)

Let’s fit the model:

model_chocolate_mlogit <- mlogit(

choice ~ dark + soft + nuts | 0 | 0,

data = chocolate_idx

)

model_parameters(model_chocolate_mlogit, digits = 4, p_digits = 4)

## Parameter | Log-Odds | SE | 95% CI | z | p

## -------------------------------------------------------------------

## dark [Dark] | 1.3863 | 0.7906 | [-0.16, 2.94] | 1.7535 | 0.0795

## soft [Soft] | -2.1972 | 1.0541 | [-4.26, -0.13] | -2.0845 | 0.0371

## nuts [Nuts] | 0.8473 | 0.6901 | [-0.51, 2.20] | 1.2279 | 0.2195Delightful. All the results are the same as the survival model and the Poisson model.

Bayesian model

We can also fit this model in a Bayesian way using {brms}. Stan has a categorical distribution family for multinomial models, and we’ll use it in the next example. For now, for the sake of simplicity, we’ll use a Poisson family, since, as we saw above, that’s a legal way of parameterizing multinomial distributions.

The data has a natural hierarchical structure to it, with 8 choices (for alternatives A through H) nested inside each of the 10 subjects.

We want to model candy choice (choice) based on candy characteristics (dark, soft, and nuts). We’ll use the subscript \(i\) to refer to individual candy choices and \(j\) to refer to subjects.

Since we can legally pretend that this multinomial selection process is actually Poisson, we’ll model it as a Poisson process that has a rate of \(\lambda_{i_j}\). We’ll model that \(\lambda_{i_j}\) with a log-linked regression model with covariates for each of the levels of each candy feature. To account for the multilevel structure, we’ll include subject-specific offsets (\(b_{0_j}\)) from the global average, thus creating random intercepts. We’ll specify fairly wide priors just because.

Here’s the formal model for all this:

\[ \begin{aligned} &\ \textbf{Probability of selection of alternative}_i \textbf{ in subject}_j \\ \text{Choice}_{i_j} \sim&\ \operatorname{Poisson}(\lambda_{i_j}) \\[10pt] &\ \textbf{Model for probability of each option} \\ \log(\lambda_{i_j}) =&\ (\beta_0 + b_{0_j}) + \beta_1 \text{Dark}_{i_j} + \beta_2 \text{Soft}_{i_j} + \beta_3 \text{Nuts}_{i_j} \\[5pt] b_{0_j} \sim&\ \mathcal{N}(0, \sigma_0) \qquad\qquad\quad \text{Subject-specific offsets from global choice probability} \\[10pt] &\ \textbf{Priors} \\ \beta_0 \sim&\ \mathcal{N}(0, 3) \qquad\qquad\quad\ \ \text{Prior for global average choice probability} \\ \beta_1, \beta_2, \beta_3 \sim&\ \mathcal{N}(0, 3) \qquad\qquad\quad\ \ \text{Prior for candy feature levels} \\ \sigma_0 \sim&\ \operatorname{Exponential}(1) \qquad \text{Prior for between-subject variability} \end{aligned} \]

And here’s the {brms} model:

model_chocolate_brms <- brm(

bf(choice ~ dark + soft + nuts + (1 | subj)),

data = chocolate,

family = poisson(),

prior = c(

prior(normal(0, 3), class = Intercept),

prior(normal(0, 3), class = b),

prior(exponential(1), class = sd)

),

chains = 4, cores = 4, iter = 2000, seed = 1234,

backend = "cmdstanr", threads = threading(2), refresh = 0,

file = "models/model_chocolate_brms"

)The results are roughly the same as what we found with all the other models—they’re slightly off because of random MCMC sampling.

model_parameters(model_chocolate_brms)

## # Fixed Effects

##

## Parameter | Median | 95% CI | pd | Rhat | ESS

## ----------------------------------------------------------------

## (Intercept) | -3.04 | [-5.07, -1.60] | 100% | 1.000 | 3598.00

## darkDark | 1.35 | [-0.05, 3.16] | 96.92% | 1.000 | 4238.00

## softSoft | -2.03 | [-4.35, -0.47] | 99.60% | 1.000 | 2867.00

## nutsNuts | 0.83 | [-0.40, 2.27] | 90.28% | 1.000 | 4648.00Predictions

In the SAS technical note example, they use the model to generated predicted probabilities of the choice of each of the options. In the world of marketing, this can also be seen as the predicted market share for each option. To do this, they plug each of the eight different different combinations of dark, soft, and nuts into the model and calculate the predicted output on the response (i.e. probability) scale. They get these results, where dark, chewy, and nuts is the most likely and popular option (commanding a 50% market share).

Choice of Chocolate Candies

Obs Dark Soft Nuts p

1 Dark Chewy Nuts 0.50400

2 Dark Chewy No Nuts 0.21600

3 Milk Chewy Nuts 0.12600

4 Dark Soft Nuts 0.05600

5 Milk Chewy No Nuts 0.05400

6 Dark Soft No Nuts 0.02400

7 Milk Soft Nuts 0.01400

8 Milk Soft No Nuts 0.00600We can do the same thing with R.

Frequentist predictions

{mlogit} model objects have predicted values stored in one of their slots (model_chocolate_mlogit$probabilities), but they’re in a weird non-tidy matrix form and I like working with tidy data. I’m also a huge fan of the {marginaleffects} package, which provides a consistent way to calculate predictions, comparisons, and slopes/marginal effects (with predictions(), comparisons(), and slopes()) for dozens of kinds of models, including mlogit() models.

So instead of wrangling the built-in mlogit() probabilities, we’ll generate predictions by feeding the model the unique combinations of dark, soft, and nuts to marginaleffects::predictions(), which will provide us with probability- or proportion-scale predictions:

preds_chocolate_mlogit <- predictions(

model_chocolate_mlogit,

newdata = datagrid(dark = unique, soft = unique, nuts = unique)

)

preds_chocolate_mlogit %>%

# predictions() hides a bunch of columns; forcing it to be a tibble unhides them

as_tibble() %>%

arrange(desc(estimate)) %>%

select(group, dark, soft, nuts, estimate, std.error, statistic, p.value)

## # A tibble: 8 × 8

## group dark soft nuts estimate std.error statistic p.value

## <chr> <fct> <fct> <fct> <dbl> <dbl> <dbl> <dbl>

## 1 F Dark Chewy Nuts 0.504 0.142 3.56 0.000373

## 2 E Dark Chewy No nuts 0.216 0.112 1.93 0.0540

## 3 B Milk Chewy Nuts 0.126 0.0849 1.48 0.138

## 4 H Dark Soft Nuts 0.0560 0.0551 1.02 0.309

## 5 A Milk Chewy No nuts 0.0540 0.0433 1.25 0.213

## 6 G Dark Soft No nuts 0.0240 0.0258 0.929 0.353

## 7 D Milk Soft Nuts 0.0140 0.0162 0.863 0.388

## 8 C Milk Soft No nuts 0.00600 0.00743 0.808 0.419Perfect! They’re identical to the SAS output.

We can play around with these predictions to describe the overall market for candy. Chewy candies dominate the market…

…and dark chewy candies are by far the most popular:

Bayesian predictions

{marginaleffects} supports {brms} models too, so we can basically run the same predictions() function to generate predictions for our Bayesian model. Magical.

When plugging values into predictions() (or avg_slopes() or any function that calculates predictions from a model), we have to decide how to handle the random subject offsets (\(b_{0_j}\)). I have a whole other blog post guide about this and how absolutely maddening the nomenclature for all this is.

By default, predictions() and friends will calculate predictions for subjects on average by using the re_formula = NULL argument. This estimate includes details from the random offsets, either by integrating them out or by using the mean and standard deviation of the random offsets to generate a simulated average subject. When working with slopes, this is also called a marginal effect.

We could also use re_formula = NA to calculate predictions for a typical subject, or a subject where the random offset is set to 0. When working with slopes, this is also called a conditional effect.

- Conditional predictions/effect = average subject =

re_formula = NA - Marginal predictions/effect = subjects on average =

re_formula = NULL(default), using existing subject levels or a new simulated subject

Again, see this guide for way more about these distinctions. In this example here, we’ll just use the default marginal predictions/effects (re_formula = NULL), or the effect for subjects on average.

The predicted proportions aren’t identical to the SAS output, but they’re close enough, given that it’s a completely different modeling approach.

preds_chocolate_brms <- predictions(

model_chocolate_brms,

newdata = datagrid(dark = unique, soft = unique, nuts = unique)

)

preds_chocolate_brms %>%

as_tibble() %>%

arrange(desc(estimate)) %>%

select(dark, soft, nuts, estimate, conf.low, conf.high)

## # A tibble: 8 × 6

## dark soft nuts estimate conf.low conf.high

## <fct> <fct> <fct> <dbl> <dbl> <dbl>

## 1 Dark Chewy Nuts 0.432 0.144 1.09

## 2 Dark Chewy No nuts 0.186 0.0424 0.571

## 3 Milk Chewy Nuts 0.110 0.0168 0.419

## 4 Dark Soft Nuts 0.0553 0.00519 0.279

## 5 Milk Chewy No nuts 0.0465 0.00552 0.219

## 6 Dark Soft No nuts 0.0230 0.00182 0.141

## 7 Milk Soft Nuts 0.0136 0.00104 0.0881

## 8 Milk Soft No nuts 0.00556 0.000326 0.0414Plots

Since predictions() returns a tidy data frame, we can plot these predicted probabilities (or market shares or however we want to think about them) with {ggplot2}:

p1 <- preds_chocolate_mlogit %>%

arrange(estimate) %>%

mutate(label = str_to_sentence(glue::glue("{dark} chocolate, {soft} interior, {nuts}"))) %>%

mutate(label = fct_inorder(label)) %>%

ggplot(aes(x = estimate, y = label)) +

geom_pointrange(aes(xmin = conf.low, xmax = conf.high), color = clrs[7]) +

scale_x_continuous(labels = label_percent()) +

labs(

x = "Predicted probability of selection", y = NULL,

title = "Frequentist {mlogit} predictions") +

theme(panel.grid.minor = element_blank(), panel.grid.major.y = element_blank())

p2 <- preds_chocolate_brms %>%

posterior_draws() %>% # Extract the posterior draws of the predictions

arrange(estimate) %>%

mutate(label = str_to_sentence(glue::glue("{dark} chocolate, {soft} interior, {nuts}"))) %>%

mutate(label = fct_inorder(label)) %>%

ggplot(aes(x = draw, y = label)) +

stat_halfeye(normalize = "xy", fill = clrs[7]) +

scale_x_continuous(labels = label_percent()) +

labs(

x = "Predicted probability of selection", y = NULL,

title = "Bayesian {brms} predictions") +

theme(panel.grid.minor = element_blank(), panel.grid.major.y = element_blank()) +

# Make the x-axis match the mlogit plot

coord_cartesian(xlim = c(-0.05, 0.78))

p1 / p2

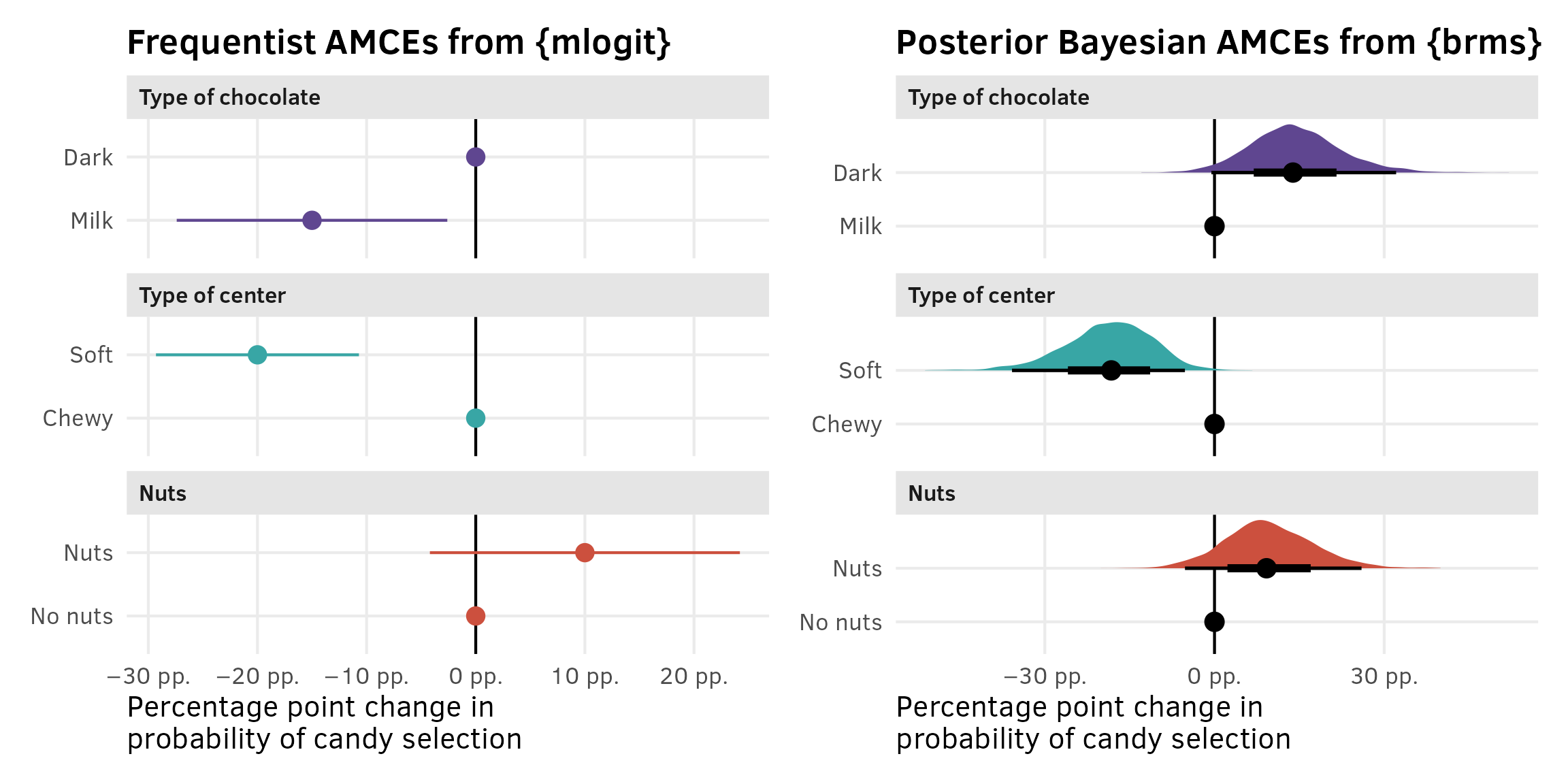

AMCEs

The marketing world doesn’t typically look at coefficients or marginal effects, but the political science world definitely does. In political science, the estimand we often care about the most is the average marginal component effect (AMCE), or the causal effect of moving one feature level to a different value, holding all other features constant. I have a whole in-depth blog post about AMCEs and how to calculate them—go look at that for more details. Long story short—AMCEs are basically the coefficients in a regression model.

Interpreting the coefficients is difficult with models that aren’t basic linear regression. Here, all these coefficients are on the log scale, so they’re not directly interpretable. The original SAS technical note also doesn’t really interpret any of these , they don’t really interpret these things anyway, since they’re more focused on predictions. All they say is this:

The parameter estimate with the smallest p-value is for soft center. Since the parameter estimate is negative, chewy is the more preferred level. Dark is preferred over milk, and nuts over no nuts, however only the p-value for Soft is less than 0.05.

We could exponentiate the coefficients to make them multiplicative (akin to odds ratios in logistic regression). For center = soft, \(e^{-2.19722}\) = 0.1111, which means that candies with a soft center are 89% less likely to be chosen than candies with a chewy center, relative to the average candy. But that’s weird to think about.

So instead we can turn to {marginaleffects} once again to calculate percentage-point scale estimands that we can interpret far more easily.

Nobody is ever consistent about the word “marginal effect.” Some people use it to refer to averages; some people use it to refer to slopes. These are complete opposites. In calculus, averages = integrals and slopes = derivatives and they’re the inverse of each other.

I like to think of marginal effects as what happens to the outcome when you move an explanatory variable a tiny bit. With continuous variables, that’s a slope; with categorical variables, that’s an offset in average outcomes. These correspond directly to how you normally interpret regression coefficients. Or returning to my favorite analogy about regression, with numeric variables we care what happens to the outcome when we slide the value up a tiny bit; with categorical variables we care about what happens to the outcome when we switch on a category.

Additionally, there are like a billion different ways to calculate marginal effects: average marginal effects (AMEs), group-average marginal effects (G-AMEs), marginal effects at user-specified values, marginal effects at the mean (MEM), and counterfactual marginal effects. See the documentation for {marginaleffects} + this mega blog post for more about these subtle differences.

Bayesian comparisons/contrasts

We can use avg_comparisons() to calculate the difference (or average marginal effect) for each of the categorical coefficients on the percentage-point scale, showing the effect of moving from milk → dark, chewy → soft, and nuts → no nuts.

(Technically we can also use avg_slopes(), even though none of these coefficients are actually slopes. {marginaleffects} is smart enough to show contrasts for categorical variables and partial derivatives/slopes for continuous variables.)

avg_comparisons(model_chocolate_brms)

##

## Term Contrast Estimate 2.5 % 97.5 %

## dark Dark - Milk 0.139 -0.00551 0.3211

## nuts Nuts - No nuts 0.092 -0.05221 0.2599

## soft Soft - Chewy -0.182 -0.35800 -0.0523

##

## Columns: term, contrast, estimate, conf.low, conf.highWhen holding all other features constant, moving from chewy → soft is associated with a posterior median 18 percentage point decrease in the probability of selection (or drop in market share if you want to think of it that way), on average.

Frequentist comparisons/contrasts

We went out of order in this section and showed how to use avg_comparisons() with the Bayesian model first instead of the frequentist model. That’s because it was easy. mlogit() models behave strangely with {marginaleffects} because {mlogit} forces its predictions to use every possible value of the alternatives A–H. Accordingly, the estimate for any coefficients in the attributes section of the {mlogit} formula (dark, soft, and nuts here) will automatically be zero. Note how here there are 24 rows of comparisons instead of 3, since we get comparisons in each of the 8 groups, and note how the estimates are all zero:

avg_comparisons(model_chocolate_mlogit)

##

## Group Term Contrast Estimate Std. Error z Pr(>|z|) 2.5 % 97.5 %

## A dark Dark - Milk -2.78e-17 7.27e-13 -3.82e-05 1 -1.42e-12 1.42e-12

## B dark Dark - Milk 0.00e+00 NA NA NA NA NA

## C dark Dark - Milk 0.00e+00 1.62e-14 0.00e+00 1 -3.17e-14 3.17e-14

## D dark Dark - Milk -6.94e-18 1.73e-13 -4.02e-05 1 -3.38e-13 3.38e-13

## E dark Dark - Milk -2.78e-17 7.27e-13 -3.82e-05 1 -1.42e-12 1.42e-12

## F dark Dark - Milk 0.00e+00 NA NA NA NA NA

## G dark Dark - Milk 0.00e+00 1.62e-14 0.00e+00 1 -3.17e-14 3.17e-14

## H dark Dark - Milk -6.94e-18 1.73e-13 -4.02e-05 1 -3.38e-13 3.38e-13

## A nuts Nuts - No nuts 1.39e-17 NA NA NA NA NA

## B nuts Nuts - No nuts 1.39e-17 NA NA NA NA NA

## C nuts Nuts - No nuts 0.00e+00 1.41e-14 0.00e+00 1 -2.77e-14 2.77e-14

## D nuts Nuts - No nuts 0.00e+00 1.41e-14 0.00e+00 1 -2.77e-14 2.77e-14

## E nuts Nuts - No nuts 0.00e+00 9.74e-13 0.00e+00 1 -1.91e-12 1.91e-12

## F nuts Nuts - No nuts 0.00e+00 9.74e-13 0.00e+00 1 -1.91e-12 1.91e-12

## G nuts Nuts - No nuts 0.00e+00 1.08e-13 0.00e+00 1 -2.11e-13 2.11e-13

## H nuts Nuts - No nuts 0.00e+00 1.08e-13 0.00e+00 1 -2.11e-13 2.11e-13

## A soft Soft - Chewy 6.94e-18 1.08e-13 6.42e-05 1 -2.12e-13 2.12e-13

## B soft Soft - Chewy 1.39e-17 2.16e-13 6.43e-05 1 -4.23e-13 4.23e-13

## C soft Soft - Chewy 6.94e-18 1.08e-13 6.42e-05 1 -2.12e-13 2.12e-13

## D soft Soft - Chewy 1.39e-17 2.16e-13 6.43e-05 1 -4.23e-13 4.23e-13

## E soft Soft - Chewy 1.39e-17 4.33e-13 3.21e-05 1 -8.48e-13 8.48e-13

## F soft Soft - Chewy 0.00e+00 4.52e-13 0.00e+00 1 -8.86e-13 8.86e-13

## G soft Soft - Chewy 1.39e-17 4.33e-13 3.21e-05 1 -8.48e-13 8.48e-13

## H soft Soft - Chewy 0.00e+00 4.52e-13 0.00e+00 1 -8.86e-13 8.86e-13

##

## Columns: group, term, contrast, estimate, std.error, statistic, p.value, conf.low, conf.highIf we had continuous variables, we could work around this by specifying our own tiny amount of marginal change to compare across, but we’re working with categories and can’t do that. Instead, with categorical variables, we can return to predictions() and define custom aggregations of different features and levels.

Before making custom aggregations, though, it’ll be helpful to illustrate what exactly we’re looking at when collapsing these results. Remember that earlier we calculated predictions for all the unique combinations of dark, soft, and nuts:

preds_chocolate_mlogit <- predictions(

model_chocolate_mlogit,

newdata = datagrid(dark = unique, soft = unique, nuts = unique)

)

preds_chocolate_mlogit %>%

as_tibble() %>%

select(group, dark, soft, nuts, estimate)

## # A tibble: 8 × 5

## group dark soft nuts estimate

## <chr> <fct> <fct> <fct> <dbl>

## 1 A Milk Chewy No nuts 0.0540

## 2 B Milk Chewy Nuts 0.126

## 3 C Milk Soft No nuts 0.00600

## 4 D Milk Soft Nuts 0.0140

## 5 E Dark Chewy No nuts 0.216

## 6 F Dark Chewy Nuts 0.504

## 7 G Dark Soft No nuts 0.0240

## 8 H Dark Soft Nuts 0.0560Four of the groups have dark = Milk and four have dark = Dark, with other varying characteristics across those groups (chewy/soft, nuts/no nuts). If we want the average proportion of all milk and dark chocolate options, we can group and summarize:

The average market share for milk chocolate candies, holding all other features constant, is 5% (\(\frac{0.0540 + 0.126 + 0.006 + 0.014}{2} = 0.05\)); the average market share for dark chocolate candies is 20% (\(\frac{0.216 + 0.504 + 0.024 + 0.056}{2} = 0.2\)). These values are the averages of the predictions from the four groups where dark is either Milk or Dark.

Instead of calculating these averages manually (which would also force us to calculate standard errors and p-values manually, which, ugh), we can calculate these aggregate group means with predictions(). To do this, we can feed a little data frame to predictions() with the by argument. The data frame needs to contain columns for the features we want to collapse, and a by column with the labels we want to include in the output. For example, if we want to collapse the eight possible choices into those with milk chocolate and those with dark chocolate, we could create a by data frame like this:

by <- data.frame(dark = c("Milk", "Dark"), by = c("Milk", "Dark"))

by

## dark by

## 1 Milk Milk

## 2 Dark DarkIf we use that by data frame in predictions(), we get the same 5% and 20% from before, but now with all of {marginaleffects}’s extra features like standard errors and confidence intervals:

predictions(

model_chocolate_mlogit,

by = by

)

##

## Estimate Std. Error z Pr(>|z|) 2.5 % 97.5 % By

## 0.20 0.0316 6.32 <0.001 0.138 0.262 Dark

## 0.05 0.0316 1.58 0.114 -0.012 0.112 Milk

##

## Columns: estimate, std.error, statistic, p.value, conf.low, conf.high, byEven better, we can use the hypothesis functionality of predictions() to conduct a hypothesis test and calculate the difference (or contrast) between these two averages, which is exactly what we’re looking for with categorical AMCEs. This shows the average causal effect of moving from milk → dark—holding all other features constant, switching the chocolate type from milk to dark causes a 15 percentage point increase in the probability of selecting the candy, on average.

predictions(

model_chocolate_mlogit,

by = by,

hypothesis = "revpairwise"

)

##

## Term Estimate Std. Error z Pr(>|z|) 2.5 % 97.5 %

## Milk - Dark -0.15 0.0632 -2.37 0.0177 -0.274 -0.026

##

## Columns: term, estimate, std.error, statistic, p.value, conf.low, conf.highWe can’t simultaneously specify all the contrasts we’re interested in single by argument, but we can do them separately and combine them into a single data frame:

amces_chocolate_mlogit <- bind_rows(

dark = predictions(

model_chocolate_mlogit,

by = data.frame(dark = c("Milk", "Dark"), by = c("Milk", "Dark")),

hypothesis = "revpairwise"

),

soft = predictions(

model_chocolate_mlogit,

by = data.frame(soft = c("Chewy", "Soft"), by = c("Chewy", "Soft")),

hypothesis = "revpairwise"

),

nuts = predictions(

model_chocolate_mlogit,

by = data.frame(nuts = c("No nuts", "Nuts"), by = c("No nuts", "Nuts")),

hypothesis = "revpairwise"

),

.id = "variable"

)

amces_chocolate_mlogit

##

## Term Estimate Std. Error z Pr(>|z|) 2.5 % 97.5 %

## Milk - Dark -0.15 0.0632 -2.37 0.0177 -0.274 -0.026

## Soft - Chewy -0.20 0.0474 -4.22 <0.001 -0.293 -0.107

## Nuts - No nuts 0.10 0.0725 1.38 0.1675 -0.042 0.242

##

## Columns: variable, term, estimate, std.error, statistic, p.value, conf.low, conf.highPlots

Plotting these AMCEs requires a bit of data wrangling, but we get really neat plots, so it’s worth it. I’ve hidden all the code here for the sake of space.

Extract variable labels

chocolate_var_levels <- tibble(

variable = c("dark", "soft", "nuts")

) %>%

mutate(levels = map(variable, ~{

x <- chocolate[[.x]]

if (is.numeric(x)) {

""

} else if (is.factor(x)) {

levels(x)

} else {

sort(unique(x))

}

})) %>%

unnest(levels) %>%

mutate(term = paste0(variable, levels))

# Make a little lookup table for nicer feature labels

chocolate_var_lookup <- tribble(

~variable, ~variable_nice,

"dark", "Type of chocolate",

"soft", "Type of center",

"nuts", "Nuts"

) %>%

mutate(variable_nice = fct_inorder(variable_nice))Combine full dataset of factor levels with model comparisons and make {mlogit} plot

amces_chocolate_mlogit_split <- amces_chocolate_mlogit %>%

separate_wider_delim(

term,

delim = " - ",

names = c("variable_level", "reference_level")

) %>%

rename(term = variable)

plot_data <- chocolate_var_levels %>%

left_join(

amces_chocolate_mlogit_split,

by = join_by(variable == term, levels == variable_level)

) %>%

# Make these zero

mutate(

across(

c(estimate, conf.low, conf.high),

~ ifelse(is.na(.x), 0, .x)

)

) %>%

left_join(chocolate_var_lookup, by = join_by(variable)) %>%

mutate(across(c(levels, variable_nice), ~fct_inorder(.)))

p1 <- ggplot(

plot_data,

aes(x = estimate, y = levels, color = variable_nice)

) +

geom_vline(xintercept = 0) +

geom_pointrange(aes(xmin = conf.low, xmax = conf.high)) +

scale_x_continuous(labels = label_pp) +

scale_color_manual(values = clrs[c(1, 3, 8)]) +

guides(color = "none") +

labs(

x = "Percentage point change in\nprobability of candy selection",

y = NULL,

title = "Frequentist AMCEs from {mlogit}"

) +

facet_col(facets = "variable_nice", scales = "free_y", space = "free")Combine full dataset of factor levels with posterior draws and make {brms} plot

# This is much easier than the mlogit mess because we can use avg_comparisons() directly

posterior_mfx <- model_chocolate_brms %>%

avg_comparisons() %>%

posteriordraws()

posterior_mfx_nested <- posterior_mfx %>%

separate_wider_delim(

contrast,

delim = " - ",

names = c("variable_level", "reference_level")

) %>%

group_by(term, variable_level) %>%

nest()

# Combine full dataset of factor levels with model results

plot_data_bayes <- chocolate_var_levels %>%

left_join(

posterior_mfx_nested,

by = join_by(variable == term, levels == variable_level)

) %>%

mutate(data = map_if(data, is.null, ~ tibble(draw = 0, estimate = 0))) %>%

unnest(data) %>%

left_join(chocolate_var_lookup, by = join_by(variable)) %>%

mutate(across(c(levels, variable_nice), ~fct_inorder(.)))

p2 <- ggplot(plot_data_bayes, aes(x = draw, y = levels, fill = variable_nice)) +

geom_vline(xintercept = 0) +

stat_halfeye(normalize = "groups") + # Make the heights of the distributions equal within each facet

guides(fill = "none") +

facet_col(facets = "variable_nice", scales = "free_y", space = "free") +

scale_x_continuous(labels = label_pp) +

scale_fill_manual(values = clrs[c(1, 3, 8)]) +

labs(

x = "Percentage point change in\nprobability of candy selection",

y = NULL,

title = "Posterior Bayesian AMCEs from {brms}"

)p1 | p2

Part 2: Minivans; repeated questions; basic multinomial logit

The setup

In this experiment, respondents are asked to choose which of these minivans they’d want to buy, based on four different features/attributes with different levels:

| Features/Attributes | Levels |

|---|---|

| Passengers | 6, 7, 8 |

| Cargo area | 2 feet, 3 feet |

| Engine | Gas, electric, hybrid |

| Price | $30,000; $35,000; $40,000 |

Respondents see this a question similar to this fifteen different times, with three options with randomly shuffled levels for each of the features.

| Option 1 | Option 2 | Option 3 | |

|---|---|---|---|

| Passengers | 7 | 8 | 6 |

| Cargo area | 3 feet | 3 feet | 2 feet |

| Engine | Electric | Gas | Hybrid |

| Price | $40,000 | $40,000 | $30,000 |

| Choice |

The data

The data for this kind of experiment has one row for each possible alternative (alt) within each set of 15 questions (ques), thus creating 3 × 15 = 45 rows per respondent (resp.id). There were 200 respondents, with 45 rows each, so there are 200 × 45 = 9,000 rows. Here, Respondent 1 chose a $30,000 gas van with 6 seats and 3 feet of cargo space in the first set of three options, a $35,000 gas van with 7 seats and 3 feet of cargo space in the second set of three options, and so on.

There’s also a column here for carpool indicating if the respondent carpools with others when commuting. It’s an individual respondent-level characteristic and is constant throughout all the questions and alternatives, and we’ll use it later.

minivans

## # A tibble: 9,000 × 9

## resp.id ques alt carpool seat cargo eng price choice

## <dbl> <dbl> <dbl> <fct> <fct> <fct> <fct> <fct> <dbl>

## 1 1 1 1 yes 6 2ft gas 35 0

## 2 1 1 2 yes 8 3ft hyb 30 0

## 3 1 1 3 yes 6 3ft gas 30 1

## 4 1 2 1 yes 6 2ft gas 30 0

## 5 1 2 2 yes 7 3ft gas 35 1

## 6 1 2 3 yes 6 2ft elec 35 0

## 7 1 3 1 yes 8 3ft gas 35 1

## 8 1 3 2 yes 7 3ft elec 30 0

## 9 1 3 3 yes 8 2ft elec 40 0

## 10 1 4 1 yes 7 3ft elec 40 1

## # ℹ 8,990 more rowsThe model

Respondents were shown three different options and asked to select one. We thus have three possible outcomes: a respondent could have selected option 1, option 2, or option 3. Because everything was randomized, there shouldn’t be any patterns in which options people choose—we don’t want to see that the first column is more common, since that would indicate that respondents are just repeatedly selecting the first column to get through the survey. Since there are three possible outcomes (option 1, 2, and 3), we’ll use multinomial logistic regression.

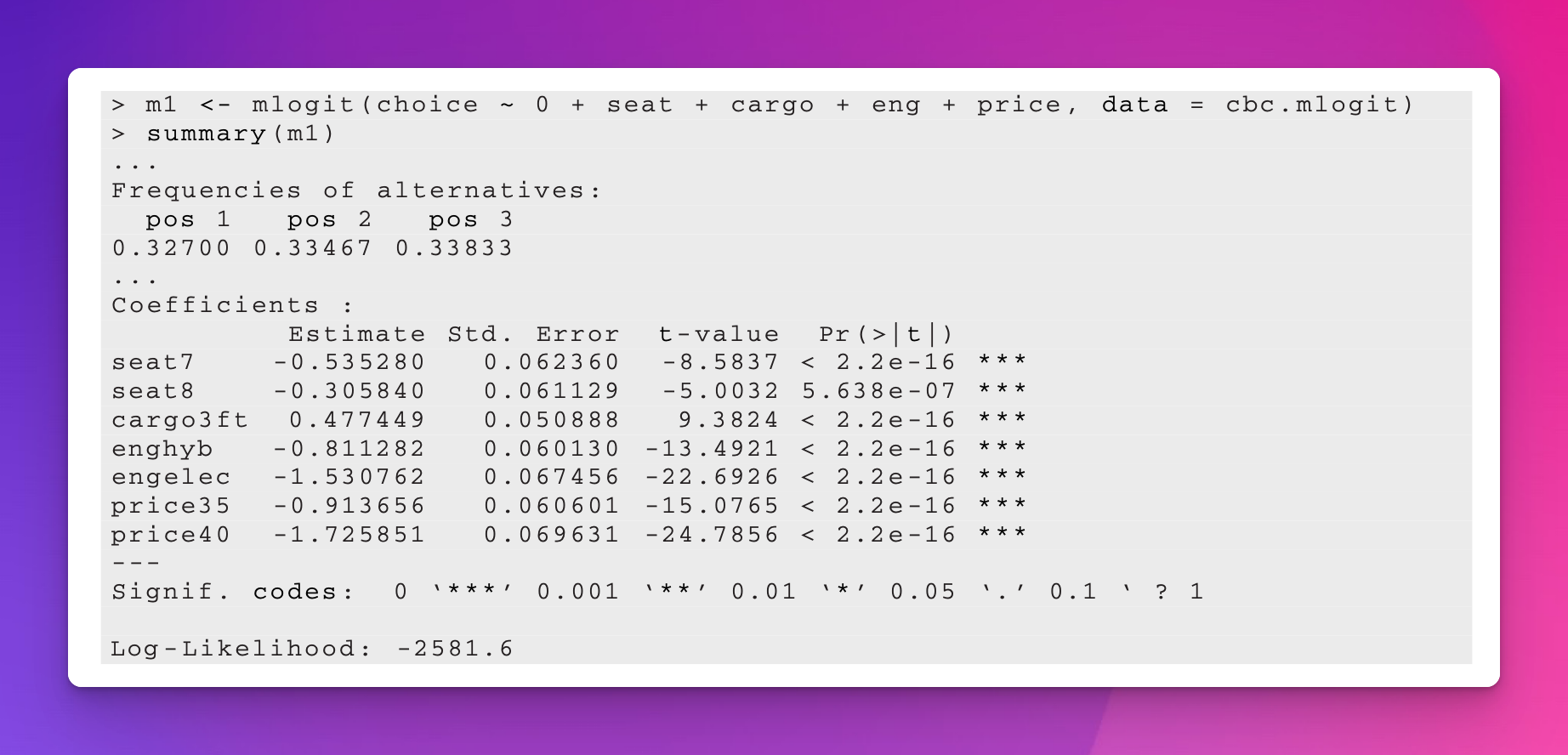

Original model as a baseline

In the example in their textbook, Chapman and Feit (2019) use {mlogit} to estimate this model and they find these results. This will be our baseline throughout this example.

mlogit model

This data is a little more complex now, since there are alternatives nested inside questions inside respondents. To account for this panel structure when using {mlogit}, we need to define two index columns: one for the unique set of alternatives offered to the respondent and one for the respondent ID. We still do this with dfidx(), but need to create a new column with an ID number for each unique combination of respondent ID and question number:

Now we can fit the model. Note the 0 ~ seat syntax here. That suppresses the intercept for the model, which behaves weirdly with multinomial models. Since there are three categories for the outcome (options 1, 2, and 3), there are two intercepts, representing cutpoints-from-ordered-logit-esque shifts in the probability of selecting option 1 vs. option 2 and option 2 vs. option 3. We don’t want to deal with those, so we’ll suppress them.

model_minivans_mlogit <- mlogit(

choice ~ 0 + seat + cargo + eng + price | 0 | 0,

data = minivans_idx

)

model_parameters(model_minivans_mlogit, digits = 4, p_digits = 4)

## Parameter | Log-Odds | SE | 95% CI | z | p

## -------------------------------------------------------------------------

## seat [7] | -0.5353 | 0.0624 | [-0.66, -0.41] | -8.5837 | 9.1863e-18

## seat [8] | -0.3058 | 0.0611 | [-0.43, -0.19] | -5.0032 | 5.6376e-07

## cargo [3ft] | 0.4774 | 0.0509 | [ 0.38, 0.58] | 9.3824 | 6.4514e-21

## eng [hyb] | -0.8113 | 0.0601 | [-0.93, -0.69] | -13.4921 | 1.7408e-41

## eng [elec] | -1.5308 | 0.0675 | [-1.66, -1.40] | -22.6926 | 5.3004e-114

## price [35] | -0.9137 | 0.0606 | [-1.03, -0.79] | -15.0765 | 2.3123e-51

## price [40] | -1.7259 | 0.0696 | [-1.86, -1.59] | -24.7856 | 1.2829e-135These are the same results from p. 371 in Chapman and Feit (2019), so it worked. Again, the marketing world doesn’t typically do much with these coefficients beyond looking at their direction and magnitude. For instance, in Chapman and Feit (2019) they say that the estimate for seat [7] here is negative, which means that a 7-seat option is less preferred than 6-seat option, and that the estimate for price [40] is more negative than the already-negative estimate for price [35], which means that (1) respondents don’t like the $35,000 option compared to the baseline $30,000 and that (2) respondents really don’t like the $40,000 option. We could theoretically exponentiate these things—like, seeing 7 seats makes it \(e^{-0.5353}\) = 0.5855 = 41% less likely to select the option compared to 6 seats—but again, that’s weird.

Bayesian model with {brms}

We can also fit this multinomial model in a Bayesian way using {brms}. Stan has a categorical family for dealing with mulitnomial/categorical outcomes. But first, we’ll look at the nested structure of this data and incorporate that into the model, since we won’t be using the weird {mlogit}-style indexed data frame. As with the chocolate experiment, the data has a natural hierarchy in it, with three questions nested inside 15 separate question sets, nested inside each of the 200 respondents.

Currently, our main outcome variable choice is binary. If we run the model with choice as the outcome with a categorical family, the model will fit, but it will go slow and {brms} will complain about it and recommend switching to regular logistic regression. The categorical family in Stan requires 2+ outcomes and a reference category. Here we have three possible options (1, 2, and 3), and we can imagine a reference category of 0 for rows that weren’t selected.

We can create a new outcome column (choice_alt) that indicates which option each respondent selected: 0 if they didn’t choose the option and 1–3 if they chose the first, second, or third option. Because of how the data is recorded, this only requires multiplying alt and choice:

minivans_choice_alt <- minivans %>%

mutate(choice_alt = factor(alt * choice))

minivans_choice_alt %>%

select(resp.id, ques, alt, seat, cargo, eng, price, choice, choice_alt)

## # A tibble: 9,000 × 9

## resp.id ques alt seat cargo eng price choice choice_alt

## <dbl> <dbl> <dbl> <fct> <fct> <fct> <fct> <dbl> <fct>

## 1 1 1 1 6 2ft gas 35 0 0

## 2 1 1 2 8 3ft hyb 30 0 0

## 3 1 1 3 6 3ft gas 30 1 3

## 4 1 2 1 6 2ft gas 30 0 0

## 5 1 2 2 7 3ft gas 35 1 2

## 6 1 2 3 6 2ft elec 35 0 0

## 7 1 3 1 8 3ft gas 35 1 1

## 8 1 3 2 7 3ft elec 30 0 0

## 9 1 3 3 8 2ft elec 40 0 0

## 10 1 4 1 7 3ft elec 40 1 1

## # ℹ 8,990 more rowsWe can now use the new four-category choice_alt column as our outcome with the categorical() family.

If we realllly wanted, we could add random effects for question sets nested inside respondents, like (1 | resp.id / ques). We’d want to do that if there were set-specific things that could influences choices. Like maybe we want to account for the possibility that everyone’s just choosing the first option, so it behaves differently? Or maybe the 5th set of questions is set to an extra difficult level on a quiz or something? Or maybe we have so many sets that we think the later ones will be less accurate because of respondent fatigue? idk. In this case, question set-specific effects don’t matter at all. Each question set is equally randomized and no different from the others, so we won’t bother modeling that layer of the hierarchy.

We want to model the choice of option 1, 2, or 3 (choice_alt) based on minivan characteristics (seat, cargo, eng, price). With the categorical model, we actually get a set of parameters to estimate the probability of selecting each of the options, which Stan calls \(\mu\), so we have a set of three probabilities: \(\{\mu_1, \mu_2, \mu_3\}\). We’ll use the subscript \(i\) to refer to individual minivan choices and \(j\) to refer to respondents. Here’s the fun formal model:

\[ \begin{aligned} &\ \textbf{Multinomial probability of selection of choice}_i \textbf{ in respondent}_j \\ \text{Choice}_{i_j} \sim&\ \operatorname{Categorical}(\{\mu_{1,i_j}, \mu_{2,i_j}, \mu_{3,i_j}\}) \\[10pt] &\ \textbf{Model for probability of each option} \\ \{\mu_{1,i_j}, \mu_{2,i_j}, \mu_{3,i_j}\} =&\ (\beta_0 + b_{0_j}) + \beta_1 \text{Seat[7]}_{i_j} + \beta_2 \text{Seat[8]}_{i_j} + \beta_3 \text{Cargo[3ft]}_{i_j} + \\ &\ \beta_4 \text{Engine[hyb]}_{i_j} + \beta_5 \text{Engine[elec]}_{i_j} + \beta_6 \text{Price[35k]}_{i_j} + \beta_7 \text{Price[40k]}_{i_j} \\[5pt] b_{0_j} \sim&\ \mathcal{N}(0, \sigma_0) \qquad\quad\quad \text{Respondent-specific offsets from global probability} \\[10pt] &\ \textbf{Priors} \\ \beta_{0 \dots 7} \sim&\ \mathcal{N} (0, 3) \qquad\qquad\ \ \text{Prior for choice-level coefficients} \\ \sigma_0 \sim&\ \operatorname{Exponential}(1) \quad \text{Prior for between-respondent variability} \end{aligned} \]

And here’s the {brms} model. Notice the much-more-verbose prior section—because the categorical family in Stan estimates separate parameters for each of the categories (\(\{\mu_1, \mu_2, \mu_3\}\)), we have a mean and standard deviation for the probability of selecting each of those options. We need to specify each of these separately too instead of just doing something like prior(normal(0, 3), class = b). Also notice the refcat argument in categorical()—this makes it so that all the estimates are relative to not choosing an option (or when choice_alt is 0). And also notice the slightly different syntax for the random respondent intercepts: (1 | ID | resp.id). That new middle ID is special {brms} formula syntax that we can use when working with categorical or ordinal families, and it makes it so that the group-level effects for the different outcomes (here options 0, 1, 2, and 3) are correlated (see p. 4 of this {brms} vignette for more about this special syntax).

model_minivans_categorical_brms <- brm(

bf(choice_alt ~ 0 + seat + cargo + eng + price + (1 | ID | resp.id)),

data = minivans_choice_alt,

family = categorical(refcat = "0"),

prior = c(

prior(normal(0, 3), class = b, dpar = mu1),

prior(normal(0, 3), class = b, dpar = mu2),

prior(normal(0, 3), class = b, dpar = mu3),

prior(exponential(1), class = sd, dpar = mu1),

prior(exponential(1), class = sd, dpar = mu2),

prior(exponential(1), class = sd, dpar = mu3)

),

chains = 4, cores = 4, iter = 2000, seed = 1234,

backend = "cmdstanr", threads = threading(2), refresh = 0,

file = "models/model_minivans_categorical_brms"

)This model gives us a ton of parameters! We get three estimates per feature level (i.e. mu1_cargo3ft, mu2_cargo3ft, and mu3_cargo3ft for the cargo3ft effect), since we’re actually estimating the effect of each covariate on the probability of selecting each of the three options.

model_parameters(model_minivans_categorical_brms)

## Parameter | Median | 95% CI | pd | Rhat | ESS

## -----------------------------------------------------------------

## mu1_seat6 | -0.34 | [-0.52, -0.16] | 99.95% | 1.001 | 2673.00

## mu1_seat7 | -0.86 | [-1.04, -0.67] | 100% | 1.000 | 3167.00

## mu1_seat8 | -0.59 | [-0.77, -0.41] | 100% | 1.000 | 3373.00

## mu1_cargo3ft | 0.46 | [ 0.32, 0.60] | 100% | 1.000 | 6314.00

## mu1_enghyb | -0.76 | [-0.92, -0.60] | 100% | 1.001 | 4628.00

## mu1_engelec | -1.51 | [-1.69, -1.33] | 100% | 0.999 | 4913.00

## mu1_price35 | -0.82 | [-0.99, -0.67] | 100% | 0.999 | 4574.00

## mu1_price40 | -1.74 | [-1.94, -1.56] | 100% | 1.000 | 4637.00

## mu2_seat6 | -0.39 | [-0.57, -0.20] | 100% | 1.000 | 2387.00

## mu2_seat7 | -0.95 | [-1.15, -0.77] | 100% | 1.001 | 2470.00

## mu2_seat8 | -0.67 | [-0.85, -0.49] | 100% | 1.001 | 2489.00

## mu2_cargo3ft | 0.49 | [ 0.35, 0.63] | 100% | 1.000 | 4836.00

## mu2_enghyb | -0.79 | [-0.95, -0.63] | 100% | 1.000 | 4421.00

## mu2_engelec | -1.40 | [-1.57, -1.22] | 100% | 1.000 | 4261.00

## mu2_price35 | -0.79 | [-0.95, -0.63] | 100% | 1.001 | 3699.00

## mu2_price40 | -1.47 | [-1.65, -1.29] | 100% | 0.999 | 3978.00

## mu3_seat6 | -0.28 | [-0.46, -0.11] | 99.85% | 1.000 | 2077.00

## mu3_seat7 | -0.78 | [-0.96, -0.60] | 100% | 1.000 | 3025.00

## mu3_seat8 | -0.63 | [-0.81, -0.46] | 100% | 1.000 | 2483.00

## mu3_cargo3ft | 0.36 | [ 0.23, 0.50] | 100% | 0.999 | 5327.00

## mu3_enghyb | -0.73 | [-0.88, -0.58] | 100% | 1.000 | 4039.00

## mu3_engelec | -1.41 | [-1.59, -1.23] | 100% | 1.001 | 3818.00

## mu3_price35 | -0.85 | [-1.01, -0.69] | 100% | 1.000 | 4315.00

## mu3_price40 | -1.56 | [-1.75, -1.39] | 100% | 0.999 | 4774.00Importantly, the estimates here are all roughly equivalent to what we get from {mlogit}: the {mlogit} estimate for cargo3ft was 0.4775, while the three median posterior {brms} estimates are 0.46 (95% credible interval: 0.32–0.60), 0.49 (0.35–0.63), and 0.36 (0.23–0.50)

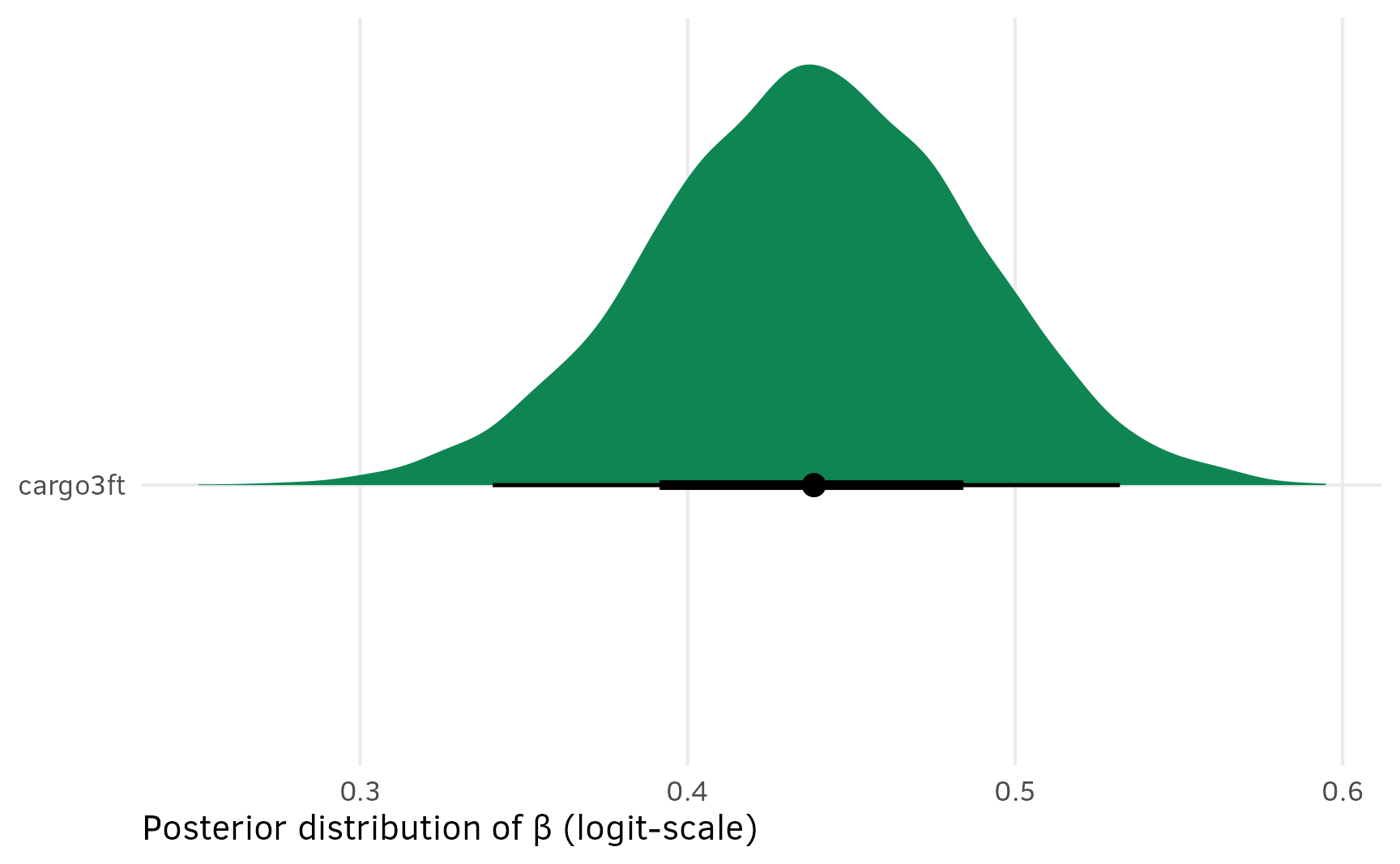

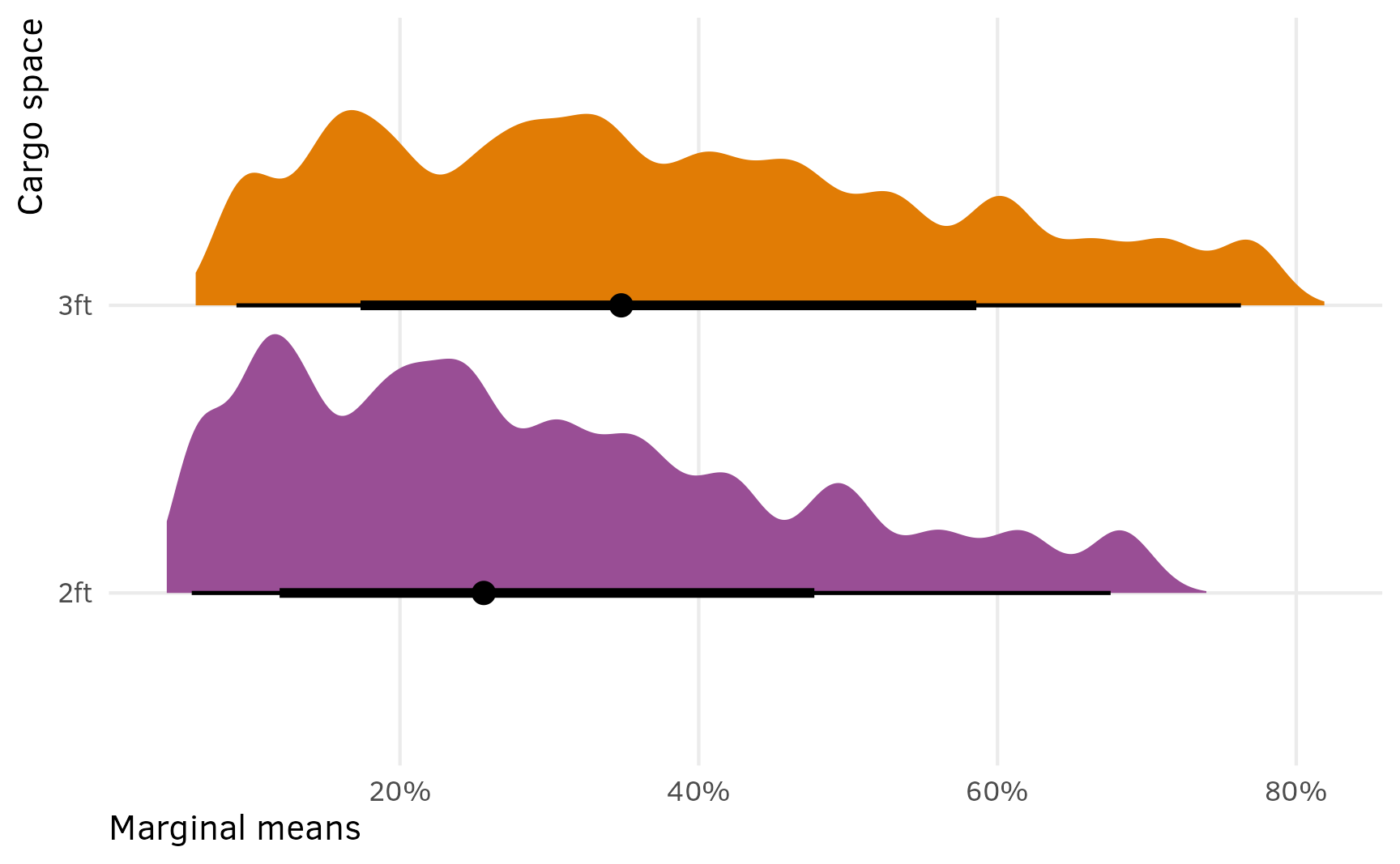

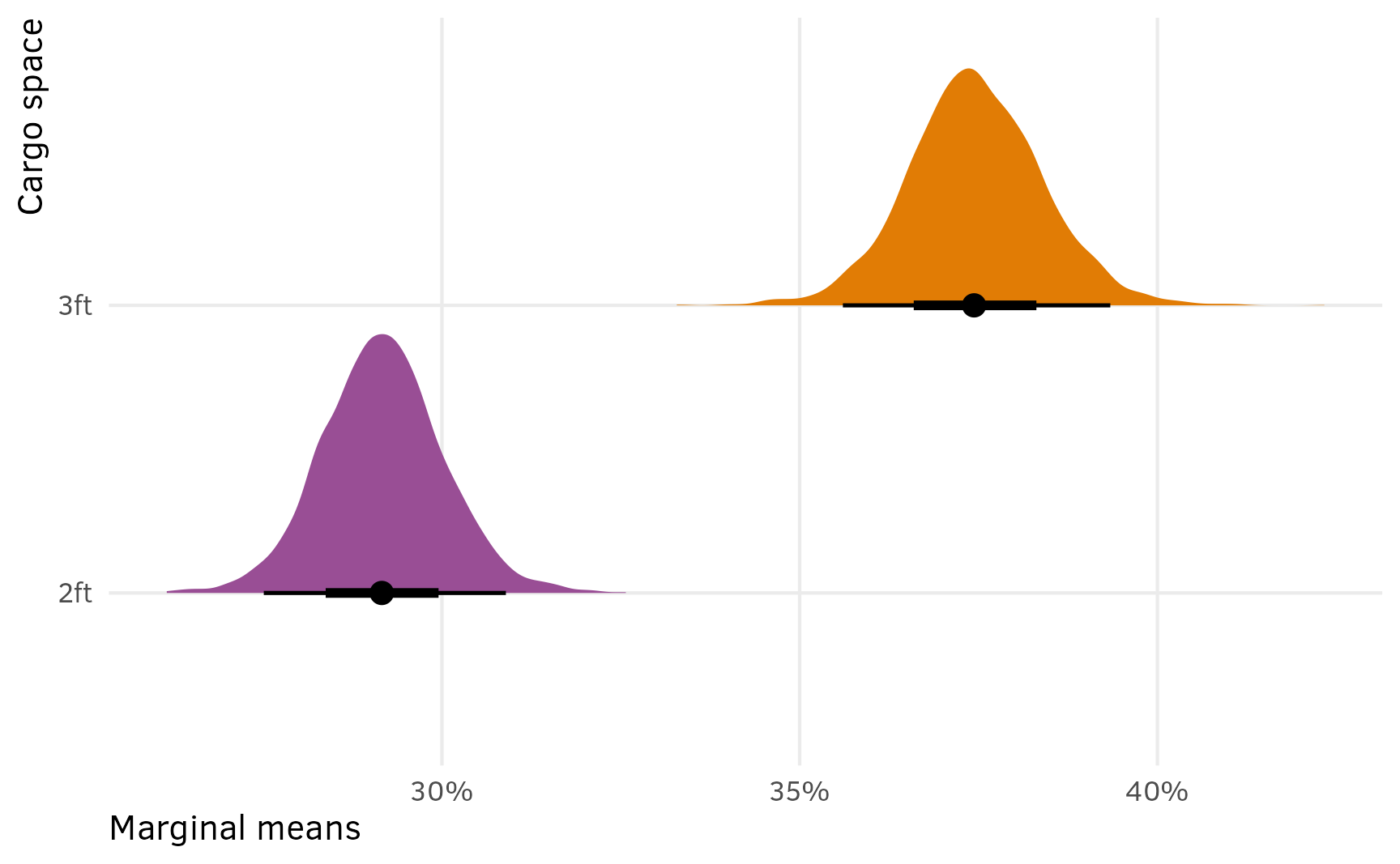

Since all the features are randomly shuffled between the three options each time, and each option is selected 1/3rd of the time, it’s probably maybe legal to pool these posterior estimates together (maaaaybeee???) so that we don’t have to work with three separate estimates for each parameter? To do this we’ll take the average of each of the three \(\mu\) estimates within each draw, which is also called “marginalizing” across the three options.

Here’s how we’d do that with {tidybayes}. The medians are all roughly the same now!

minivans_cat_marginalized <- model_minivans_categorical_brms %>%

gather_draws(`^b_.*$`, regex = TRUE) %>%

# Each variable name has "mu1", "mu2", etc. built in, like "b_mu1_seat6". This

# splits the .variable column into two parts based on a regular expression,

# creating one column for the mu part ("b_mu1_") and one for the rest of the

# variable name ("seat6")

separate_wider_regex(

.variable,

patterns = c(mu = "b_mu\\d_", .variable = ".*")

) %>%

# Find the average of the three mu estimates for each variable within each

# draw, or marginalize across the three options, since they're randomized

group_by(.variable, .draw) %>%

summarize(.value = mean(.value))

minivans_cat_marginalized %>%

group_by(.variable) %>%

median_qi()

## # A tibble: 8 × 7

## .variable .value .lower .upper .width .point .interval

## <chr> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 cargo3ft 0.439 0.340 0.532 0.95 median qi

## 2 engelec -1.44 -1.56 -1.32 0.95 median qi

## 3 enghyb -0.762 -0.871 -0.651 0.95 median qi

## 4 price35 -0.823 -0.935 -0.713 0.95 median qi

## 5 price40 -1.59 -1.72 -1.47 0.95 median qi

## 6 seat6 -0.337 -0.464 -0.208 0.95 median qi

## 7 seat7 -0.862 -0.994 -0.734 0.95 median qi

## 8 seat8 -0.629 -0.753 -0.503 0.95 median qiAnd for fun, here’s what the posterior for new combined/collapsed/marginalized cargo3ft looks like. Great.

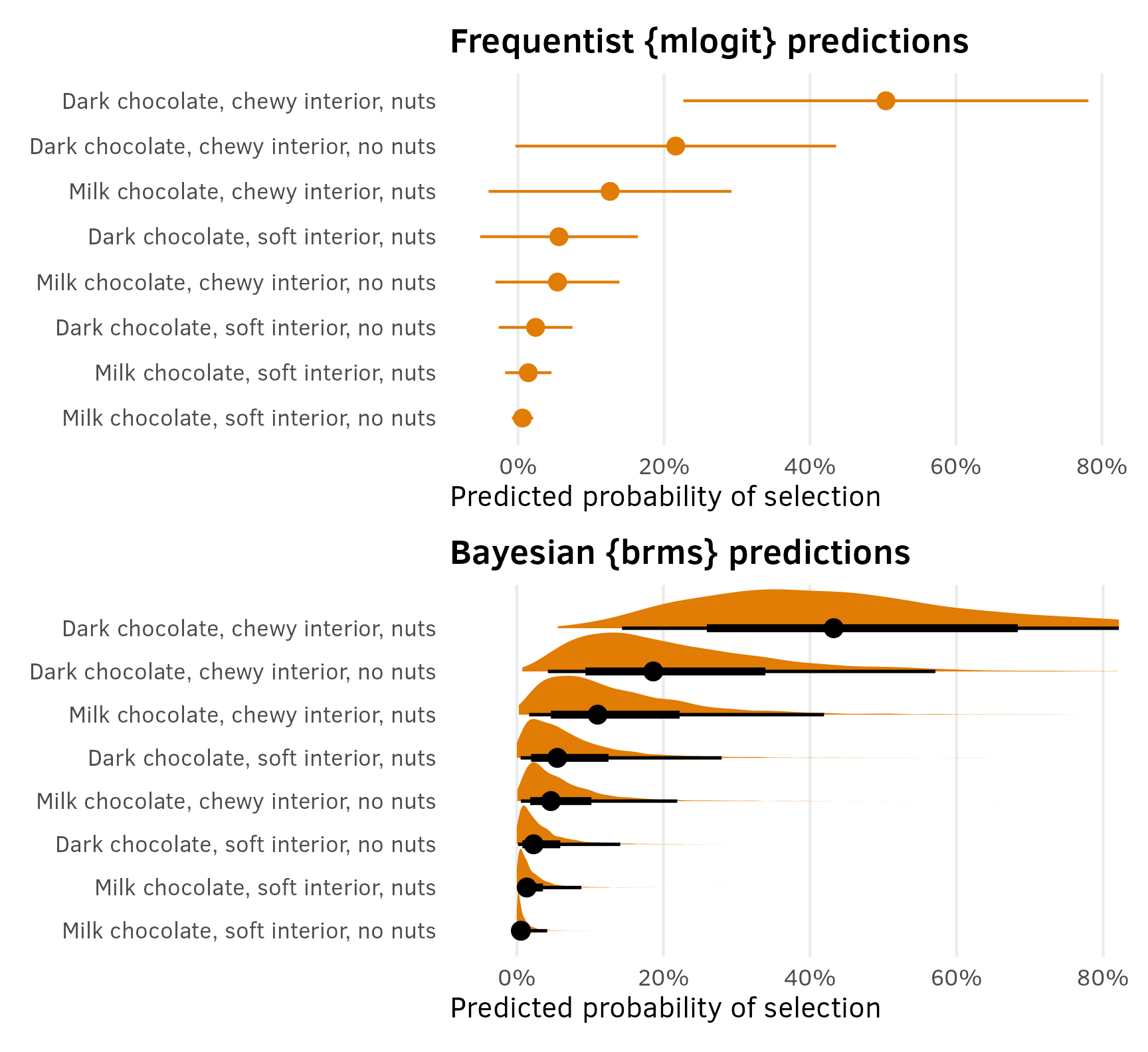

Predictions

As we saw in the first example with chocolates, the marketing world typically uses predictions from these kinds of models to estimate the predicted market share for products with different constellations of features. That was a pretty straightforward task with the chocolate model since respondents were shown all 8 options simultaneously. It’s a lot trickier with the minivan example where respondents were shown 15 sets of 3 options. Dealing with multinomial predictions is a bear of a task because these models are a lot more complex.

Frequentist predictions

With the chocolate model, we could just use avg_predictions(model_chocolate_mlogit) and automatically get predictions for all 8 options. That’s not the case here:

avg_predictions(model_minivans_mlogit)

##

## Group Estimate Std. Error z Pr(>|z|) 2.5 % 97.5 %

## 1 0.333 0.000349 955 <0.001 0.332 0.334

## 2 0.333 0.000145 2291 <0.001 0.333 0.334

## 3 0.334 0.000376 889 <0.001 0.333 0.335

##

## Columns: group, estimate, std.error, statistic, p.value, conf.low, conf.highWe get three predictions, and they’re all 33ish%. That’s because respondents were presented with three randomly shuffled options and chose one of them. All these predictions tell us is that across all 15 iterations of the questions, 1/3 of respondents selected the first option, 1/3 the second, and 1/3 the third. That’s a good sign in this case—there’s no evidence that people were just repeatedly choosing the first option. But in the end, these predictions aren’t super useful.

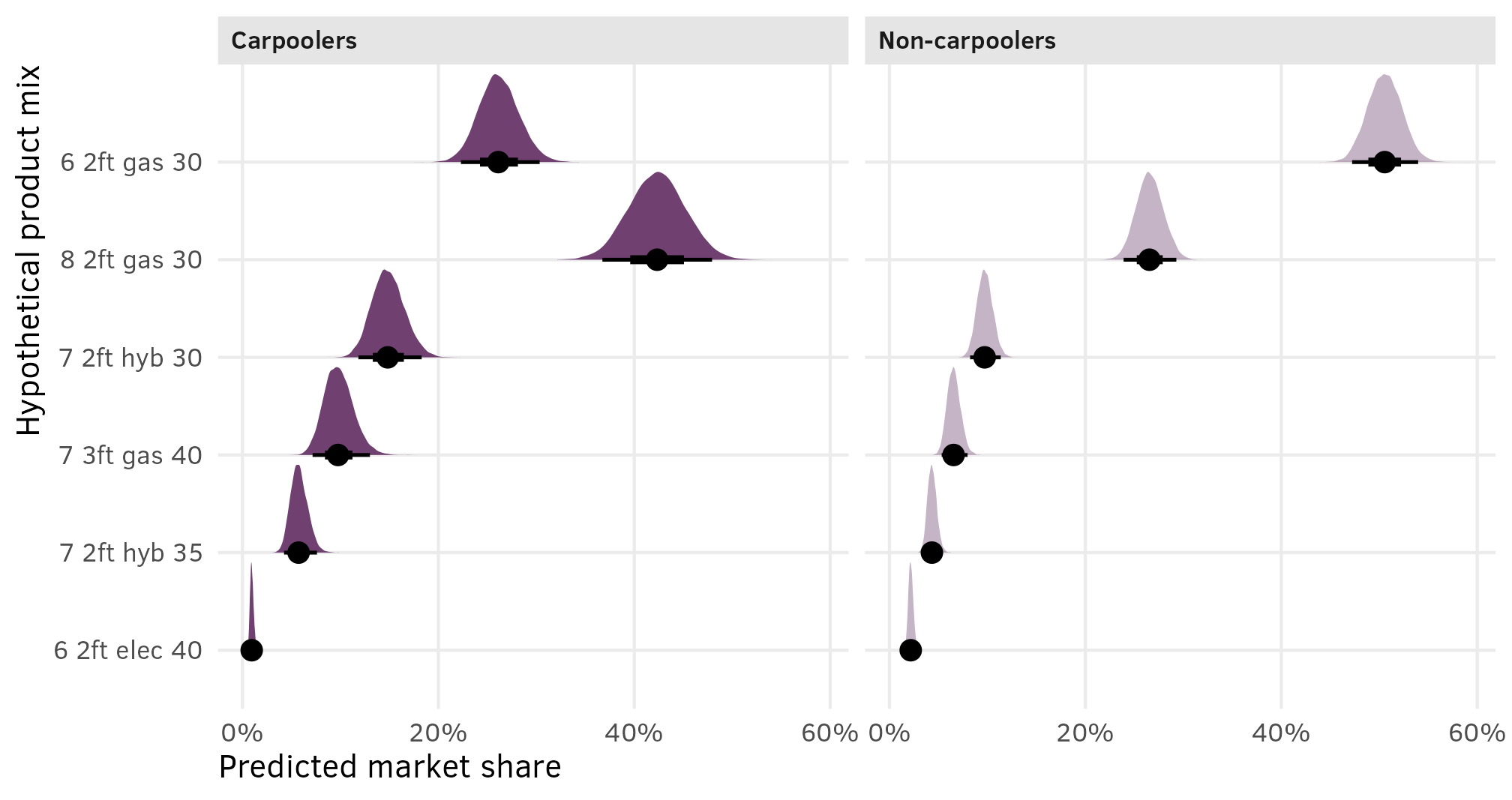

We instead want to be able to get predicted market shares (or predicted probabilities) for any given mix of products. For instance, here are six hypothetical products with different combinations of seats, cargo space, engines, and prices:

example_product_mix <- tribble(

~seat, ~cargo, ~eng, ~price,

"7", "2ft", "hyb", "30",

"6", "2ft", "gas", "30",

"8", "2ft", "gas", "30",

"7", "3ft", "gas", "40",

"6", "2ft", "elec", "40",

"7", "2ft", "hyb", "35"

) %>%

mutate(across(everything(), factor)) %>%

mutate(eng = factor(eng, levels = levels(minivans$eng)))

example_product_mix

## # A tibble: 6 × 4

## seat cargo eng price

## <fct> <fct> <fct> <fct>

## 1 7 2ft hyb 30

## 2 6 2ft gas 30

## 3 8 2ft gas 30

## 4 7 3ft gas 40

## 5 6 2ft elec 40

## 6 7 2ft hyb 35If we were working with any other type of model, we could plug this data into the newdata argument of predictions() and get predicted values. That doesn’t work here though. There were 200 respondents in the original data, and {mlogit}-predictions need to happen on a dataset with a multiple of that many rows. We can’t just feed it 6 values.

predictions(model_minivans_mlogit, newdata = example_product_mix)

## Error: The `newdata` argument for `mlogit` models must be a data frame with a number of rows equal to a multiple of the number of choices: 200.Instead, following Chapman and Feit (2019) (and this Stan forum post), we can manually multiply the covariates in example_product_mix with the model coefficients to calculate “utility” (or predicted vales on the logit scale), which we can then exponentiate and divide to calculate market shares.

From what I can tell, this is not possible with {marginaleffects} because that package can’t work with the coefficients from {mlogit} models and can only really work with predictions. {mlogit} predictions are forced to be on the response/probability scale since they’re, like, predictions, so there’s no way to get them on the log/logit link scale to calculate utilities and shares.

# Create a matrix of 0s and 1s for the values in `example_product_mix`, omitting

# the first column (seat6)

example_product_dummy_encoded <- model.matrix(

update(model_minivans_mlogit$formula, 0 ~ .),

data = example_product_mix

)[, -1]

example_product_dummy_encoded

## seat7 seat8 cargo3ft enghyb engelec price35 price40

## 1 1 0 0 1 0 0 0

## 2 0 0 0 0 0 0 0

## 3 0 1 0 0 0 0 0

## 4 1 0 1 0 0 0 1

## 5 0 0 0 0 1 0 1

## 6 1 0 0 1 0 1 0

# Matrix multiply the matrix of 0s and 1s with the model coefficients to get

# logit-scale predictions, or utility

utility <- example_product_dummy_encoded %*% coef(model_minivans_mlogit)

# Divide each exponentiated utility by the sum of the exponentiated utilities to

# get the market share

share <- exp(utility) / sum(exp(utility))

# Stick all of these in one final dataset

bind_cols(share = share, logits = utility, example_product_mix)

## # A tibble: 6 × 6

## share[,1] logits[,1] seat cargo eng price

## <dbl> <dbl> <fct> <fct> <fct> <fct>

## 1 0.113 -1.35 7 2ft hyb 30

## 2 0.433 0 6 2ft gas 30

## 3 0.319 -0.306 8 2ft gas 30

## 4 0.0728 -1.78 7 3ft gas 40

## 5 0.0167 -3.26 6 2ft elec 40

## 6 0.0452 -2.26 7 2ft hyb 35On p. 375 of Chapman and Feit (2019) (and at this Stan forum post), there’s a function called predict.mnl() that does this utility and share calculation automatically. Because this post is more didactic and because I’m more interested in the Bayesian approach, I didn’t use it earlier, but it works well.

predict.mnl <- function(model, data) {

# Function for predicting shares from a multinomial logit model

# model: mlogit object returned by mlogit()

# data: a data frame containing the set of designs for which you want to

# predict shares. Same format at the data used to estimate model.

data.model <- model.matrix(update(model$formula, 0 ~ .), data = data)[ , -1]

utility <- data.model %*% model$coef

share <- exp(utility) / sum(exp(utility))

cbind(share, data)

}

predict.mnl(model_minivans_mlogit, example_product_mix)

## share seat cargo eng price

## 1 0.11273 7 2ft hyb 30

## 2 0.43337 6 2ft gas 30

## 3 0.31918 8 2ft gas 30

## 4 0.07281 7 3ft gas 40

## 5 0.01669 6 2ft elec 40

## 6 0.04521 7 2ft hyb 35This new predicted share column sums to one, and it shows us the predicted market share assuming these are the only six products available. The $30,000 six-seater 2ft gas van and the $30,000 eight-seater 2ft gas van would comprise more than 75% (0.43337 + 0.31918) of a market consisting of these six products. Because we did this calculation by hand, we lose all of {marginaleffects}’s extra features like standard errors and hypothesis tests. Alas.

Bayesian predictions

If we use the categorical multinomial {brms} model we run into the same issue of getting weird predictions. Here it shows that 2/3rds of predictions are 0, which makes sense—if a respondent is offered 10 iterations of 3 possible choices, that would be 30 total choices, but they can only choose one option per iteration, so 20 choices (or 20/30 or 2/3) wouldn’t be selected. The other three groups are each 11%, since that’s the remaining 33% divided evenly across three options. Neat, I guess, but still not super helpful.

avg_predictions(model_minivans_categorical_brms)

##

## Group Estimate 2.5 % 97.5 %

## 0 0.667 0.657 0.676

## 1 0.109 0.103 0.115

## 2 0.112 0.105 0.118

## 3 0.113 0.106 0.119

##

## Columns: group, estimate, conf.low, conf.highInstead of going through the manual process of matrix-multiplying a dataset of some mix of products with a single set of coefficients, we can use predictions(..., type = "link") to get predicted values on the log-odds scale, or that utility value that we found before.

marginaleffects::predictions() vs. {tidybayes} functions

We can actually use either marginaleffects::predictions() or {tidybayes}’s *_draw() functions for these posterior predictions. They do the same thing, with slightly different syntax:

# Logit-scale predictions with marginaleffects::predictions()

model_minivans_categorical_brms %>%

predictions(newdata = example_product_mix, re_formula = NA, type = "link") %>%

posterior_draws()

# Logit-scale predictions with tidybayes::add_linpred_draws()

model_minivans_categorical_brms %>%

add_linpred_draws(newdata = example_product_mix, re_formula = NA)Earlier in the chocolate example, I used marginaleffects::predictions() with the Bayesian {brms} model. Here I’m going to switch to the {tidybayes} prediction functions instead, in part because these multinomial models with the categorical() family are a lot more complex (though {marginaleffects} can handle them nicely), but mostly because in the actual paper I’m working on with real conjoint data, our MCMC results were generated with raw Stan code through rstan, and {marginaleffects} doesn’t support raw Stan models.

Check out this guide for the differences between {tidybayes}’s three general prediction functions: predicted_draws(), epred_draws(), and linpred_draws().

Additionally, we now actually have 4,000 draws in 3 categories (option 1, option 2, and option 3), so we actually have 12,000 sets of coefficients (!). To take advantage of the full posterior distribution of these coefficients, we can calculate shares within each set of draws within each of the three categories, resulting in a distribution of shares rather than single values.

draws_df <- example_product_mix %>%

add_linpred_draws(model_minivans_categorical_brms, value = "utility", re_formula = NA)

shares_df <- draws_df %>%

# Look at each set of predicted utilities within each draw within each of the

# three outcomes

group_by(.draw, .category) %>%

mutate(share = exp(utility) / sum(exp(utility))) %>%

ungroup() %>%

mutate(

mix_type = paste(seat, cargo, eng, price, sep = " "),

mix_type = fct_reorder(mix_type, share)

)We can summarize this huge dataset of posterior shares to get medians and credible intervals, but we need to do one extra step first. Right now, we have three predictions for each mix type, one for each of the categories (i.e. option 1, option 2, and option 3.

shares_df %>%

group_by(mix_type, .category) %>%

median_qi(share)

## # A tibble: 18 × 8

## mix_type .category share .lower .upper .width .point .interval

## <fct> <fct> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 6 2ft elec 40 1 0.0161 0.0123 0.0208 0.95 median qi

## 2 6 2ft elec 40 2 0.0238 0.0183 0.0302 0.95 median qi

## 3 6 2ft elec 40 3 0.0216 0.0168 0.0275 0.95 median qi

## 4 7 2ft hyb 35 1 0.0513 0.0400 0.0647 0.95 median qi

## 5 7 2ft hyb 35 2 0.0485 0.0379 0.0605 0.95 median qi

## 6 7 2ft hyb 35 3 0.0532 0.0424 0.0660 0.95 median qi

## 7 7 3ft gas 40 1 0.0693 0.0543 0.0876 0.95 median qi

## 8 7 3ft gas 40 2 0.0891 0.0705 0.111 0.95 median qi

## 9 7 3ft gas 40 3 0.0775 0.0615 0.0967 0.95 median qi

## 10 7 2ft hyb 30 1 0.117 0.0976 0.139 0.95 median qi

## 11 7 2ft hyb 30 2 0.107 0.0891 0.128 0.95 median qi

## 12 7 2ft hyb 30 3 0.124 0.104 0.146 0.95 median qi

## 13 8 2ft gas 30 1 0.326 0.292 0.363 0.95 median qi

## 14 8 2ft gas 30 2 0.314 0.282 0.348 0.95 median qi

## 15 8 2ft gas 30 3 0.299 0.267 0.331 0.95 median qi

## 16 6 2ft gas 30 1 0.418 0.380 0.457 0.95 median qi

## 17 6 2ft gas 30 2 0.416 0.379 0.454 0.95 median qi

## 18 6 2ft gas 30 3 0.423 0.387 0.462 0.95 median qiSince those options were all randomized, we can lump them all together as a single choice. To do this we’ll take the average share across the three categories (this is also called “marginalizing”) within each posterior draw.

shares_marginalized <- shares_df %>%

# Marginalize across categories within each draw

group_by(mix_type, .draw) %>%

summarize(share = mean(share)) %>%

ungroup()

shares_marginalized %>%

group_by(mix_type) %>%

median_qi(share)

## # A tibble: 6 × 7

## mix_type share .lower .upper .width .point .interval

## <fct> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 6 2ft elec 40 0.0206 0.0173 0.0242 0.95 median qi

## 2 7 2ft hyb 35 0.0512 0.0435 0.0600 0.95 median qi

## 3 7 3ft gas 40 0.0788 0.0673 0.0915 0.95 median qi

## 4 7 2ft hyb 30 0.116 0.103 0.131 0.95 median qi

## 5 8 2ft gas 30 0.313 0.291 0.337 0.95 median qi

## 6 6 2ft gas 30 0.419 0.394 0.446 0.95 median qiAnd we can plot them:

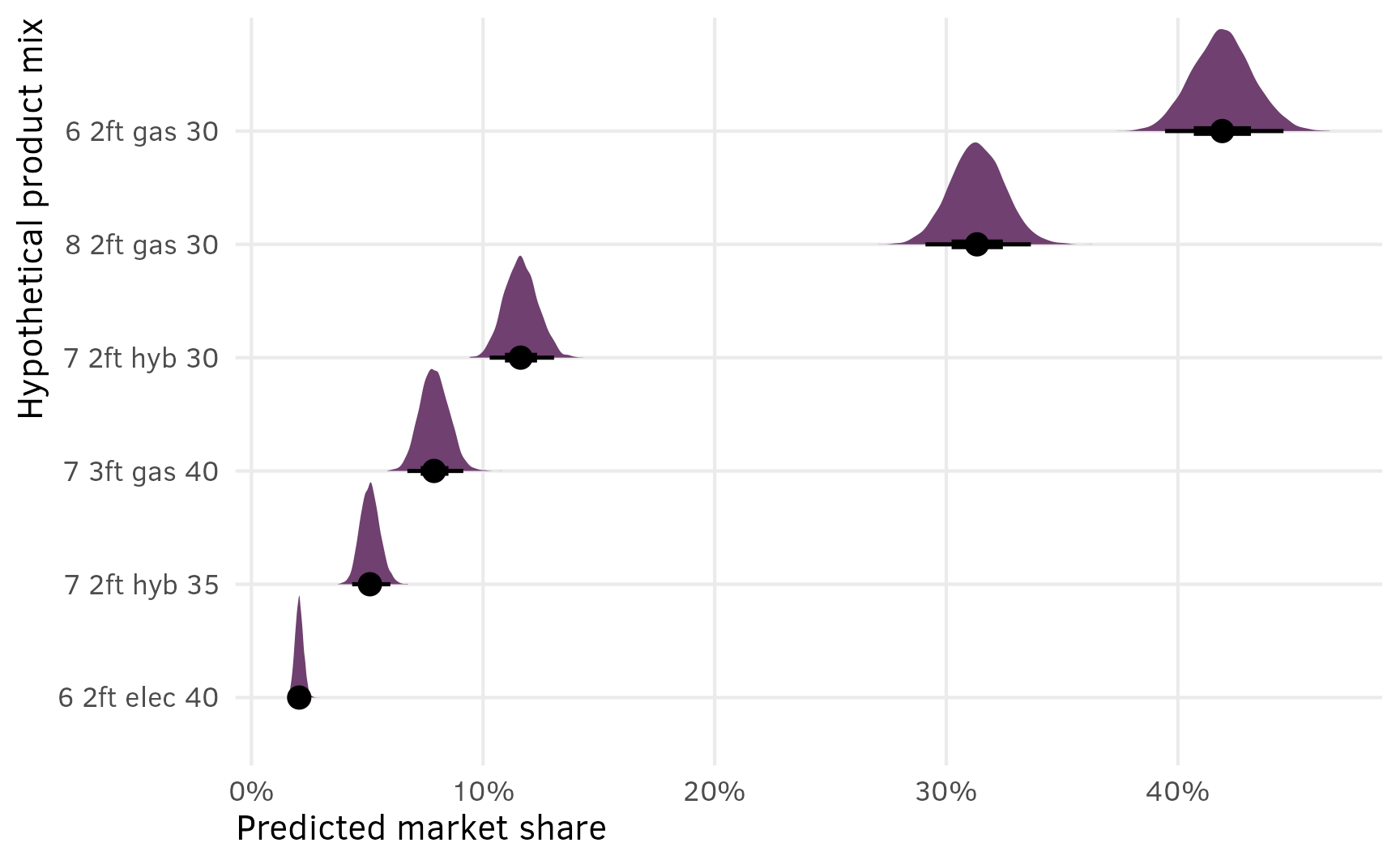

shares_marginalized %>%

ggplot(aes(x = share, y = mix_type)) +

stat_halfeye(fill = clrs[10], normalize = "xy") +

scale_x_continuous(labels = label_percent()) +

labs(x = "Predicted market share", y = "Hypothetical product mix")

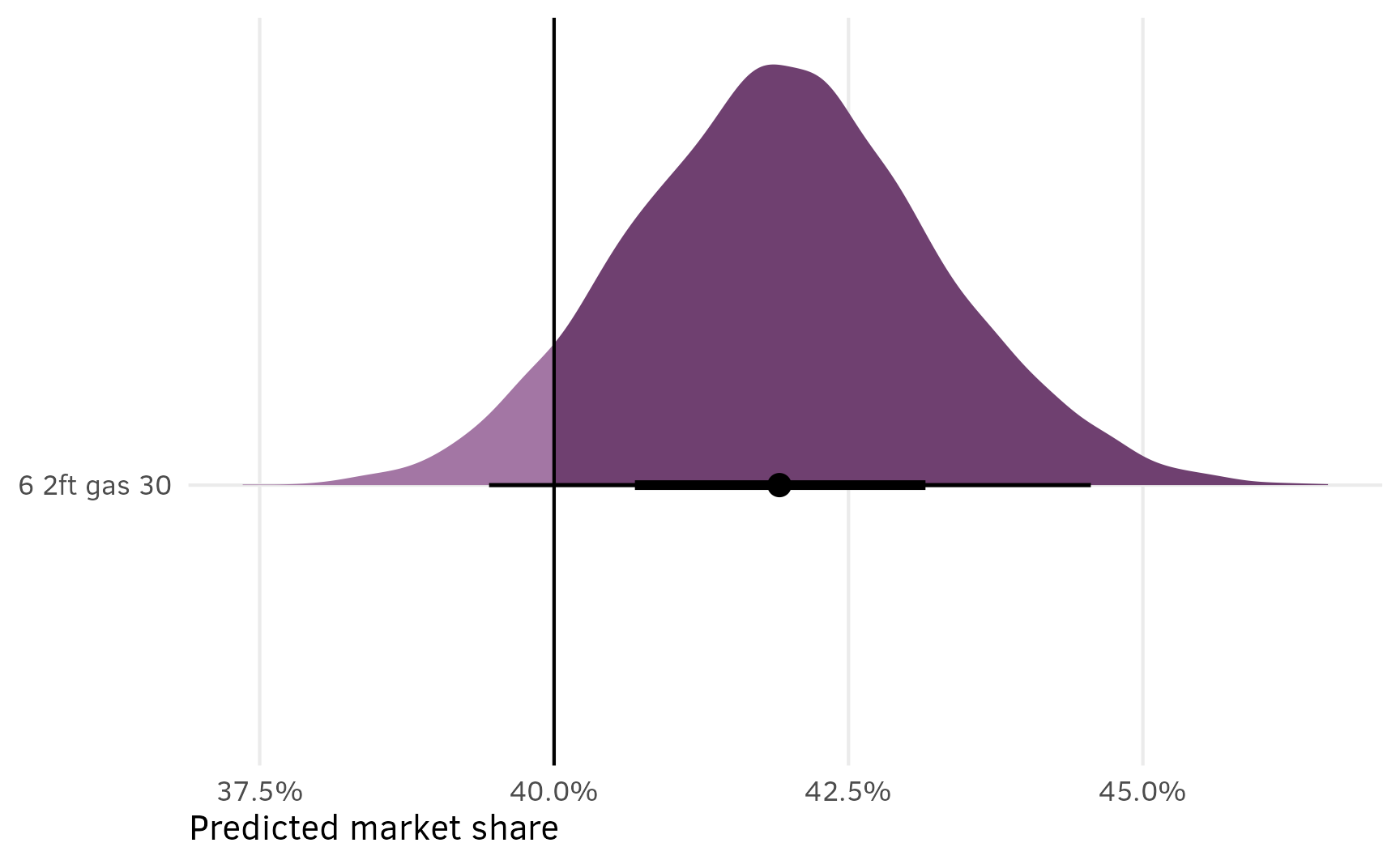

This is great because (1) it includes the uncertainty in the estimated shares, and (2) it lets us do neat Bayesian inference and say things like “there’s a 93% chance that in this market of 6 options, a $30,000 6-passenger gas minivan with 2 feet of storage would reach at least 40% market share”:

shares_marginalized %>%

filter(mix_type == "6 2ft gas 30") %>%

summarize(prop_greater_40 = sum(share >= 0.4) / n())

## # A tibble: 1 × 1

## prop_greater_40

## <dbl>

## 1 0.931

shares_marginalized %>%

filter(mix_type == "6 2ft gas 30") %>%

ggplot(aes(x = share, y = mix_type)) +

stat_halfeye(aes(fill_ramp = after_stat(x >= 0.4)), fill = clrs[10]) +

geom_vline(xintercept = 0.4) +

scale_x_continuous(labels = label_percent()) +

scale_fill_ramp_discrete(from = colorspace::lighten(clrs[10], 0.4), guide = "none") +

labs(x = "Predicted market share", y = NULL)

AMCEs

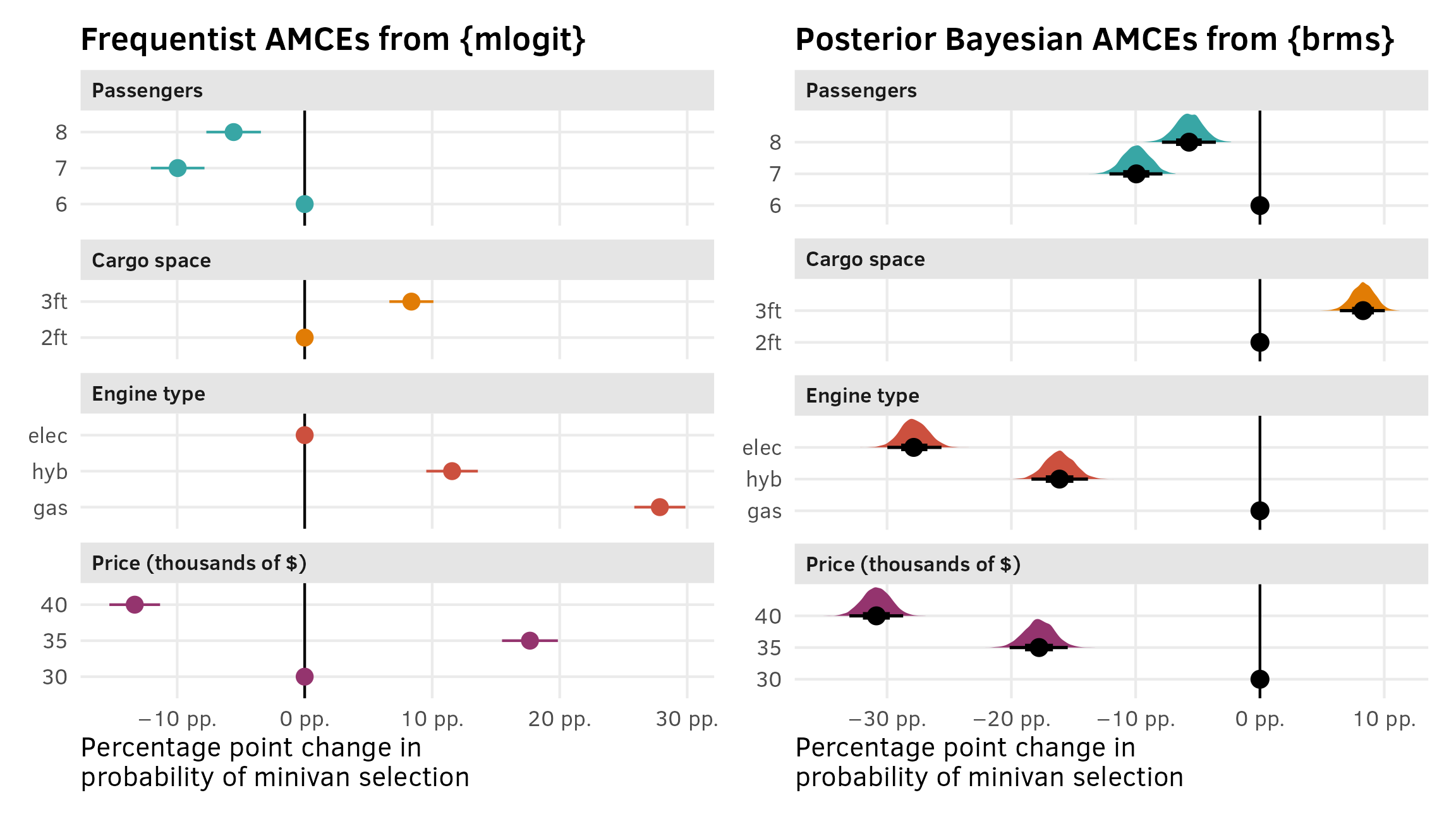

As explained in the AMCEs section for the chocolate data, in the social sciences we’re less concerned about predicted market shares and more concerned about causal effects. Holding all other features constant, what is the effect of a $5,000 increase in price or moving from 2 feet → 3 feet of storage space on the probability (or favorability) of selecting a minivan?

In the chocolate example, we were able to use marginaleffects::avg_comparisons() with the Bayesian model and get categorical contrasts automatically. This was because we cheated and used a Poisson model, since those can secretly behave like multinomial models. For the frequentist {mlogit}-based model, we had to use marginaleffects::predictions() instead and specify a special by argument to collapse the predictions into the different contrasts we were interested in.

In this case, since both the frequentist and Bayesian models for minivans are true multinomial models, we have to return to {marginaleffects}’s special syntax that lets us pass a data frame as the by argument.

Frequentist comparisons/contrasts

To help with the intuition behind this, since it’s more complex this time, we’ll first create a data frame with all 54 combinations of all the feature levels (3 seats × 2 cargos × 3 engines × 3 prices) and create a by column label that concatenates all the labels together so there’s a single unique label for each row:

by_all_combos <- minivans %>%

tidyr::expand(seat, cargo, eng, price) %>%

mutate(by = paste(seat, cargo, eng, price, sep = "_"))

by_all_combos

## # A tibble: 54 × 5

## seat cargo eng price by

## <fct> <fct> <fct> <fct> <chr>

## 1 6 2ft gas 30 6_2ft_gas_30

## 2 6 2ft gas 35 6_2ft_gas_35

## 3 6 2ft gas 40 6_2ft_gas_40

## 4 6 2ft hyb 30 6_2ft_hyb_30

## 5 6 2ft hyb 35 6_2ft_hyb_35

## 6 6 2ft hyb 40 6_2ft_hyb_40

## 7 6 2ft elec 30 6_2ft_elec_30

## 8 6 2ft elec 35 6_2ft_elec_35

## 9 6 2ft elec 40 6_2ft_elec_40

## 10 6 3ft gas 30 6_3ft_gas_30

## # ℹ 44 more rowsWe can then feed this by_all_combos data frame into predictions(), which will generate predictions for all these levels collapsed by all three of the possible groups (i.e. option 1, option 2, and option 3). We can then split the by column back into separate columns for each of the feature levels so that we have those original columns back.

all_preds_mlogit <- predictions(

model_minivans_mlogit,

# predictions.mlogit() weirdly gets mad when working with tibbles :shrug:

by = as.data.frame(by_all_combos)

) %>%

# Split the `by` column up into separate columns

separate(by, into = c("seat", "cargo", "eng", "price"))

as_tibble(all_preds_mlogit)

## # A tibble: 54 × 10

## estimate std.error statistic p.value conf.low conf.high seat cargo eng price

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <chr> <chr> <chr> <chr>

## 1 0.348 0.0135 25.8 1.89e-146 0.321 0.374 6 2ft elec 30

## 2 0.173 0.00907 19.0 7.82e- 81 0.155 0.190 6 2ft elec 35

## 3 0.0872 0.00565 15.4 1.04e- 53 0.0761 0.0983 6 2ft elec 40

## 4 0.695 0.0122 57.0 0 0.671 0.719 6 2ft gas 30

## 5 0.491 0.0147 33.3 1.08e-243 0.462 0.520 6 2ft gas 35

## 6 0.311 0.0130 23.8 1.40e-125 0.285 0.336 6 2ft gas 40

## 7 0.499 0.0138 36.3 3.76e-288 0.472 0.526 6 2ft hyb 30

## 8 0.300 0.0126 23.8 8.97e-125 0.275 0.324 6 2ft hyb 35

## 9 0.161 0.00952 16.9 3.90e- 64 0.142 0.180 6 2ft hyb 40

## 10 0.430 0.0147 29.2 2.13e-187 0.402 0.459 6 3ft elec 30

## # ℹ 44 more rowsThere are a lot of predicted probabilities here, so we need to collapse and average these by groups to make any sense of them. For instance, suppose we’re interested in the AMCE of cargo space. We can first find the average predicted probability of selection with some grouping and summarizing:

Holding all other features constant, the average probability (or average favorability, or average market share, or whatever we want to call it) of selecting a minivan with 2 feet of storage space is 0.292 (this is the average of the 27 predictions from all_preds_mlogit where cargo = 2ft); the average probability for a minivan with 3 feet of storage space is 0.375 (again, this is the average of the 27 predictions from all_preds_mlogit where cargo = 3ft). There’s an 8.3 percentage point difference between these groups. This is the causal effect or AMCE: switching from 2 feet to 3 feet increases minivan favorability by 8 percentage points on average.

This manual calculation works, but it’s tedious and doesn’t include anything about standard errors. So instead, we can do it automatically with predictions(..., by = data.frame(...)).

preds_minivan_cargo_mlogit <- predictions(

model_minivans_mlogit,

by = data.frame(

cargo = levels(minivans$cargo),

by = levels(minivans$cargo)

)

)

preds_minivan_cargo_mlogit

##

## Estimate Std. Error z Pr(>|z|) 2.5 % 97.5 % By

## 0.291 0.00440 66.2 <0.001 0.283 0.300 2ft

## 0.375 0.00441 85.2 <0.001 0.367 0.384 3ft

##